Randomized Reactive Redundancy for Byzantine Fault-Tolerance in Parallelized Learning


Dec 19, 2019

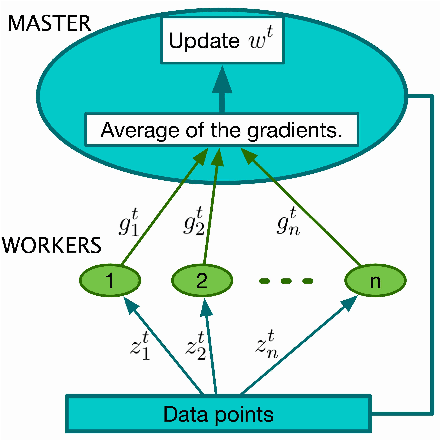

This report considers the problem of Byzantine fault-tolerance in synchronous parallelized learning based on the parallelized stochastic gradient descent (parallelized-SGD) algorithm. The system comprises a master and $n$ workers, of which up to $f$ are Byzantine faulty. Byzantine workers need not follow the master's instructions correctly, and may send maliciously incorrect (faulty) information. The identity of the Byzantine workers remains fixed throughout the learning process and is unknown a priori to the master. We propose two coding schemes, one deterministic and one randomized, that guarantee exact fault-tolerance if $2f < n$. Both schemes use reactive redundancy to isolate Byzantine workers that eventually send faulty information. The computation efficiency of the schemes compares favorably with that of other (deterministic or randomized) coding schemes for exact fault-tolerance.
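The abstract does not spell out the schemes, so the following is only a minimal sketch of one plausible reading of reactive redundancy, not the authors' algorithm. It assumes the master first asks $f + 1$ workers for the same gradient, accepts the value if all replies agree (at least one of them is honest), and otherwise reacts by querying $f$ more workers and taking the majority over $2f + 1$ replies, which is possible only when $2f < n$. The function names, the scalar least-squares gradient, and the way a faulty worker corrupts its reply are all hypothetical.

```python
from collections import Counter


def true_gradient(w, x, y):
    # Gradient of 0.5 * (w * x - y) ** 2 with respect to the scalar weight w.
    return (w * x - y) * x


def worker_reply(worker_id, byzantine, w, x, y):
    # Hypothetical fault model: a Byzantine worker adds a fixed offset.
    g = true_gradient(w, x, y)
    return g + 100.0 if worker_id in byzantine else g


def checked_gradient(workers, f, byzantine, w, x, y):
    """Return (gradient, workers identified as faulty). Needs len(workers) >= 2f + 1."""
    # Step 1: proactive redundancy, f + 1 replicas are enough to detect a fault.
    replies = {i: worker_reply(i, byzantine, w, x, y) for i in workers[: f + 1]}
    if len(set(replies.values())) == 1:
        return next(iter(replies.values())), set()
    # Step 2: reactive redundancy, add f replicas so honest replies form a majority.
    for i in workers[f + 1 : 2 * f + 1]:
        replies[i] = worker_reply(i, byzantine, w, x, y)
    majority, _ = Counter(replies.values()).most_common(1)[0]
    faulty = {i for i, g in replies.items() if g != majority}
    return majority, faulty


workers = list(range(5))  # n = 5
grad, faulty = checked_gradient(workers, f=2, byzantine={1}, w=1.0, x=2.0, y=1.0)
print(grad, faulty)
```

Under these assumptions the extra $f$ replicas are paid for only when a disagreement is observed, and any worker identified as faulty can be excluded from later iterations. A randomized variant could run the check on only a random subset of iterations, but the abstract does not give those details.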
