Random Planted Forest: a directly interpretable tree ensemble

Paper and Code

Dec 29, 2020

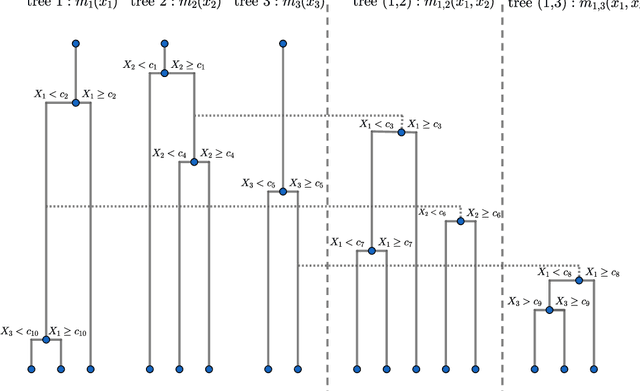

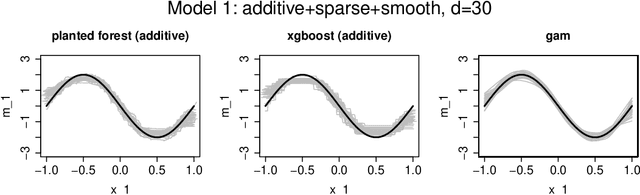

We introduce a novel interpretable and tree-based algorithm for prediction in a regression setting in which each tree in a classical random forest is replaced by a family of planted trees that grow simultaneously. The motivation for our algorithm is to estimate the unknown regression function from a functional ANOVA decomposition perspective, where each tree corresponds to a function within that decomposition. Therefore, planted trees are limited in the number of interaction terms. The maximal order of approximation in the ANOVA decomposition can be specified or left unlimited. If a first order approximation is chosen, the result is an additive model. In the other extreme case, if the order of approximation is not limited, the resulting model puts no restrictions on the form of the regression function. In a simulation study we find encouraging prediction and visualisation properties of our random planted forest method. We also develop theory for an idealised version of random planted forests in the case of an underlying additive model. We show that in the additive case, the idealised version achieves up to a logarithmic factor asymptotically optimal one-dimensional convergence rates of order $n^{-2/5}$.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge