RadarPillars: Efficient Object Detection from 4D Radar Point Clouds

Paper and Code

Aug 09, 2024

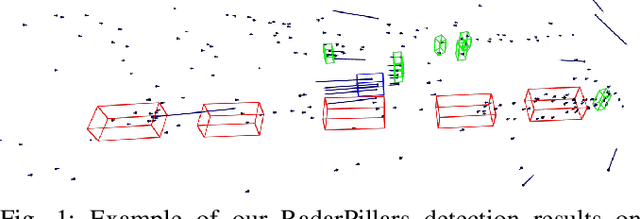

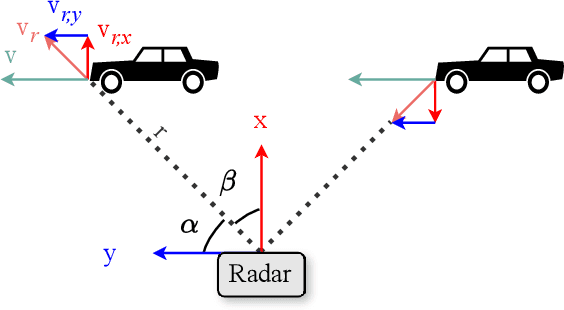

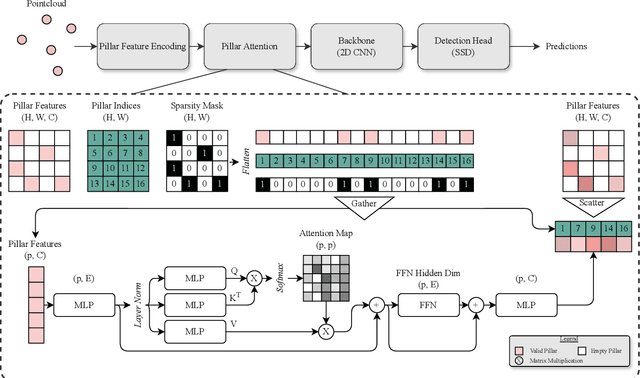

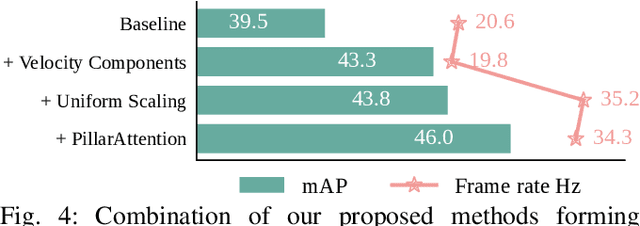

Automotive radar systems have evolved to provide not only range, azimuth and Doppler velocity, but also elevation data. This additional dimension allows 4D radar data to be represented as a 3D point cloud. As a result, existing deep learning methods for 3D object detection, which were initially developed for LiDAR data, are often applied to these radar point clouds. However, this neglects the special characteristics of 4D radar data, such as its extreme sparsity, and fails to make optimal use of the velocity information. To address these gaps in the state of the art, we present RadarPillars, a pillar-based object detection network. By decomposing radial velocity data, introducing PillarAttention for efficient feature extraction, and studying layer scaling to accommodate radar sparsity, RadarPillars significantly outperforms state-of-the-art detection results on the View-of-Delft dataset. Importantly, this comes at a significantly reduced parameter count, surpassing existing methods in terms of efficiency and enabling real-time performance on edge devices.
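
The abstract does not spell out how the radial velocity is decomposed. As a rough illustration only, the sketch below assumes one plausible reading: each point's radial velocity is projected onto the sensor's x and y axes using the point's azimuth angle, and the two components are appended as extra point features. The function name `decompose_radial_velocity`, the sample points, and the choice to ignore elevation are all assumptions made for this example, not details taken from the paper.

```python
import math

def decompose_radial_velocity(x, y, v_r):
    """Project a radial velocity onto the x and y axes (assumed formulation).

    The azimuth is taken from the point's position in the sensor frame;
    elevation is ignored in this simplified sketch.
    """
    phi = math.atan2(y, x)  # azimuth of the point as seen from the sensor
    return v_r * math.cos(phi), v_r * math.sin(phi)

# Hypothetical radar points: (x, y, z, radial velocity in m/s)
points = [
    (10.0, 0.0, 0.5, 2.0),
    (0.0, 5.0, 0.2, -1.5),
    (3.0, 4.0, 1.0, 5.0),
]

# Append the two velocity components as additional per-point features.
augmented = []
for x, y, z, v_r in points:
    v_x, v_y = decompose_radial_velocity(x, y, v_r)
    augmented.append((x, y, z, v_r, v_x, v_y))
```

In this sketch, a point on the x axis keeps its whole radial velocity in the x component, while a point off-axis splits it between the two components according to its azimuth. The magnitude of the radial velocity is preserved in every case.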
