Quantum-Inspired Support Vector Machine

Paper and Code

Jul 30, 2019

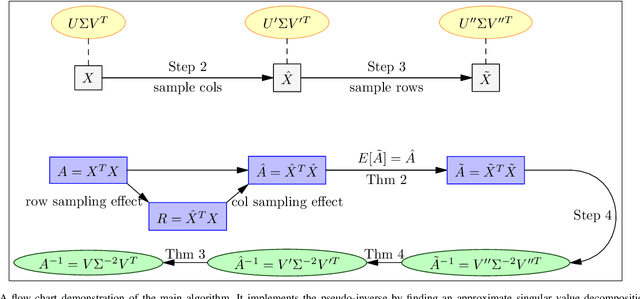

The support vector machine (SVM) is a powerful and flexible supervised learning model that analyzes data for both classification and regression. The complexity of the usual SVM algorithms scales polynomially with the dimension of the data space and the number of data points. Inspired by the quantum SVM, we present a quantum-inspired classical algorithm for SVM that uses fast sampling techniques. In our approach, we develop a method for sampling the kernel matrix from the given information about the data points, and we classify by estimating the classification expression. Our approach can be applied to various types of SVM, such as linear SVM, non-linear SVM, and soft-margin SVM. Theoretical analysis shows that classification can be performed with arbitrarily high success probability in time logarithmic in both the dimension of the data space and the number of data points, matching the runtime of the quantum SVM.
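The abstract does not spell out the sampling procedure. As a rough illustration of the kind of primitive such algorithms typically rely on, the sketch below estimates an inner product (one entry of a linear kernel matrix) by sampling coordinates with probability proportional to their squared magnitude. The function name `sample_inner_product` and its parameters are hypothetical and chosen for illustration; this is an assumption about the general technique, not the paper's actual algorithm.

```python
import numpy as np


def sample_inner_product(x, y, num_samples, rng):
    """Estimate <x, y> by norm-based (length-squared) sampling over x.

    Illustrative assumption, not the paper's method: index i is drawn
    with probability x[i]**2 / ||x||**2, and each draw contributes
    ||x||**2 * y[i] / x[i], which is an unbiased estimate of <x, y>.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    norm_sq = float(np.dot(x, x))
    if norm_sq == 0.0:
        return 0.0  # x is the zero vector, so the inner product is zero
    probs = x ** 2 / norm_sq
    # Indices with x[i] == 0 have probability 0 and are never drawn,
    # so the division below is safe.
    idx = rng.choice(len(x), size=num_samples, p=probs)
    estimates = norm_sq * y[idx] / x[idx]
    return float(estimates.mean())
```

The variance of a single draw is ||x||²||y||² − ⟨x, y⟩², so the number of samples needed for a given accuracy depends on the norms of the vectors rather than on their dimension. In this sketch the probability vector is built in linear time for simplicity; a sublinear algorithm would instead assume a data structure that supports such sampling directly.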
