QR-MIX: Distributional Value Function Factorisation for Cooperative Multi-Agent Reinforcement Learning

Sep 28, 2020

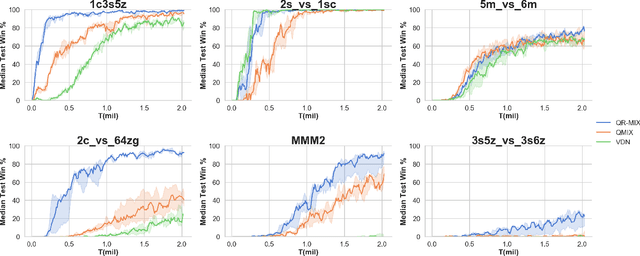

In Cooperative Multi-Agent Reinforcement Learning (MARL) under the setting of Centralized Training with Decentralized Execution (CTDE), agents observe and interact with their environment locally and independently. With local observations and random sampling, the randomness in rewards and observations leads to randomness in long-term returns. Existing methods such as Value Decomposition Network (VDN) and QMIX estimate only the mean of the long-term return and ignore this randomness. Our proposed model, QR-MIX, combines QMIX with Implicit Quantile Network (IQN) and uses quantile regression to model the joint state-action value as a distribution. In addition, because the monotonicity constraint in QMIX limits the expressiveness of the joint state-action value distribution and may lead to incorrect estimates in nonmonotonic cases, we design a flexible loss function to replace the absolute weights used in QMIX. Our method enhances the expressiveness of the mixing network and is more tolerant of randomness and nonmonotonicity. Experiments demonstrate that QR-MIX outperforms prior works in the StarCraft Multi-Agent Challenge (SMAC) environment.
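To make the quantile regression idea concrete, the following is a minimal NumPy sketch of the quantile Huber loss commonly used in quantile-based distributional RL, the family of methods that IQN belongs to. It is an illustration under stated assumptions, not the QR-MIX implementation: the function name `quantile_huber_loss`, its arguments, and the threshold `kappa` are hypothetical choices made here, and the flexible loss that replaces QMIX's absolute weights is not reproduced because the abstract does not specify it.

```python
import numpy as np


def quantile_huber_loss(pred_quantiles, taus, target_samples, kappa=1.0):
    """Quantile Huber loss between predicted quantiles and target samples.

    Hypothetical helper for illustration only (not taken from QR-MIX).
    pred_quantiles: shape (N,), predicted return quantile values
    taus:           shape (N,), quantile fractions in (0, 1), one per prediction
    target_samples: shape (M,), samples of the target return distribution
    kappa:          Huber threshold (assumed to be > 0)
    """
    pred = np.asarray(pred_quantiles, dtype=float)[:, None]    # (N, 1)
    tau = np.asarray(taus, dtype=float)[:, None]               # (N, 1)
    target = np.asarray(target_samples, dtype=float)[None, :]  # (1, M)

    u = target - pred                                          # pairwise errors, (N, M)
    abs_u = np.abs(u)
    # Huber loss: quadratic near zero, linear beyond kappa.
    huber = np.where(abs_u <= kappa, 0.5 * u ** 2, kappa * (abs_u - 0.5 * kappa))
    # Asymmetric weight: under-estimates are weighted by tau,
    # over-estimates by (1 - tau).
    weight = np.abs(tau - (u < 0).astype(float))
    # Average over target samples, sum over predicted quantiles.
    return float((weight * huber / kappa).mean(axis=1).sum())


# A 0.9-quantile estimate is penalised more for being too low than too high.
too_low = quantile_huber_loss([0.0], [0.9], [1.0])
too_high = quantile_huber_loss([0.0], [0.9], [-1.0])
print(too_low, too_high)
```

The asymmetric weight is what pushes each output toward a different quantile of the return distribution, rather than toward the mean that VDN and QMIX estimate.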
