Pyramid-Driven Alignment: Pyramid Principle Guided Integration of Large Language Models and Knowledge Graphs

Oct 17, 2024

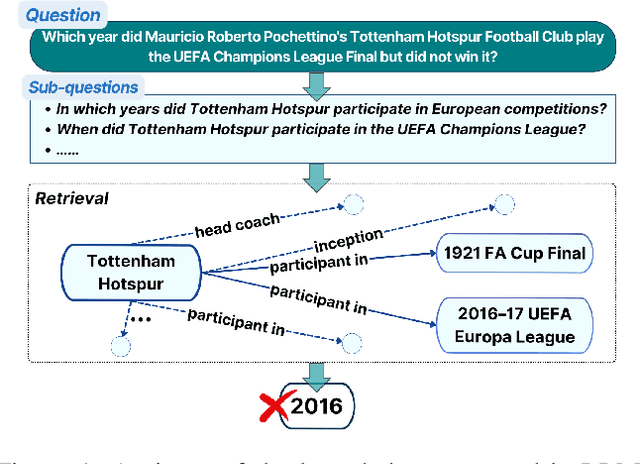

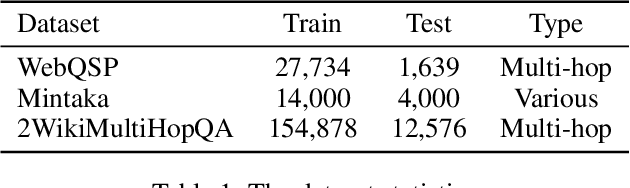

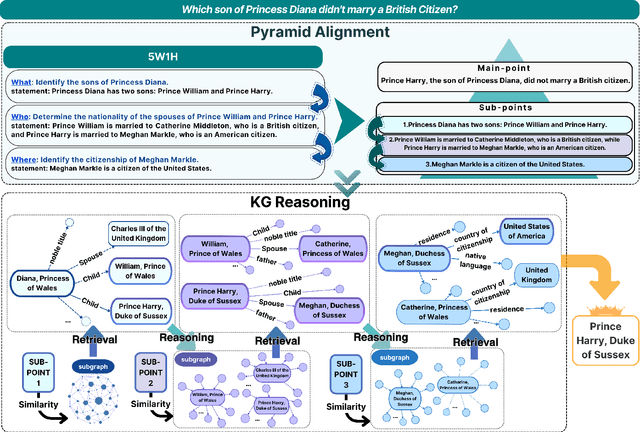

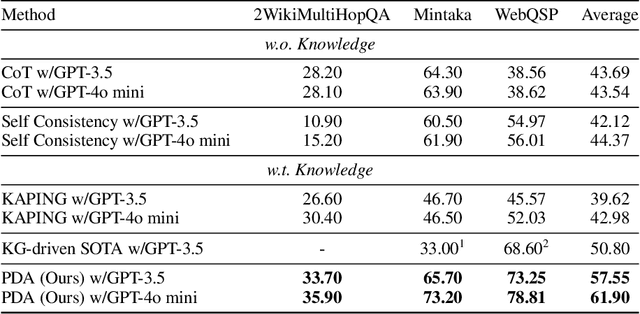

Large Language Models (LLMs) possess impressive reasoning abilities but are prone to generating incorrect information, often referred to as hallucinations. While incorporating external Knowledge Graphs (KGs) can partially mitigate this issue, existing methods primarily treat KGs as static knowledge repositories, overlooking the critical disparity between KG and LLM knowledge, and failing to fully exploit the reasoning capabilities inherent in KGs. To address these limitations, we propose Pyramid-Driven Alignment (PDA), a novel framework for seamlessly integrating LLMs with KGs. PDA utilizes Pyramid Principle analysis to construct a hierarchical pyramid structure. This structure is designed to reflect the input question and generate more validated deductive knowledge, thereby enhancing the alignment of LLMs and KGs and ensuring more cohesive integration. Furthermore, PDA employs a recursive mechanism to harness the underlying reasoning abilities of KGs, resulting in more accurate knowledge retrieval for question-answering tasks. Our experimental results reveal a substantial performance advantage of PDA over state-of-the-art baselines, with improvements reaching 26.70% and 26.78%.
