Putting RDF2vec in Order

Paper and Code

Aug 11, 2021

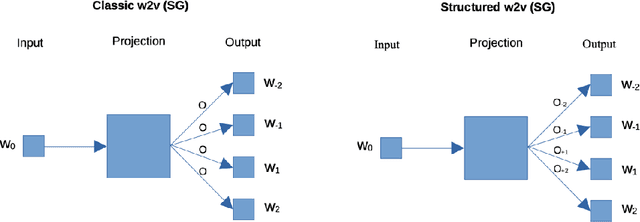

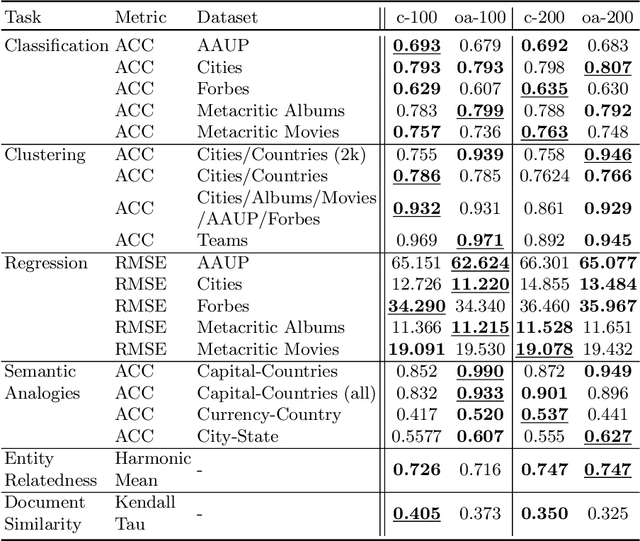

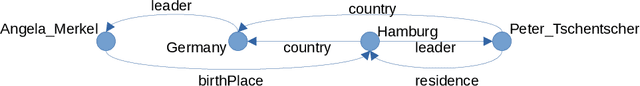

The RDF2vec method for creating node embeddings on knowledge graphs is based on word2vec, which, in turn, is agnostic towards the position of context words. In this paper, we argue that this might be a shortcoming when training RDF2vec, and show that using a word2vec variant that respects order yields considerable performance gains, especially on tasks where entities of different classes are involved.
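To make "agnostic towards the position of context words" concrete, the sketch below contrasts how training pairs are formed from a single walk. The abstract does not describe the order-aware variant; one well-known option is the structured skip-gram of Ling et al. (2015), which keeps the relative offset of each context word and uses a separate output matrix per offset. The functions `skipgram_pairs` and `structured_pairs` and the toy walks are hypothetical illustrations, not the authors' implementation.

```python
from collections import Counter
from typing import List, Tuple

# Hypothetical toy walks: the same two entities in swapped subject/object roles.
WALK_A = ["Alice", "knows", "Bob"]
WALK_B = ["Bob", "knows", "Alice"]


def skipgram_pairs(walk: List[str], window: int) -> List[Tuple[str, str]]:
    """Classic skip-gram: emit (center, context) pairs and discard the offset."""
    pairs = []
    for i, center in enumerate(walk):
        for j in range(max(0, i - window), min(len(walk), i + window + 1)):
            if j != i:
                pairs.append((center, walk[j]))
    return pairs


def structured_pairs(walk: List[str], window: int) -> List[Tuple[str, str, int]]:
    """Order-aware variant (assumed structured skip-gram): keep the relative
    offset j - i, which would select a position-specific output matrix."""
    pairs = []
    for i, center in enumerate(walk):
        for j in range(max(0, i - window), min(len(walk), i + window + 1)):
            if j != i:
                pairs.append((center, walk[j], j - i))
    return pairs


if __name__ == "__main__":
    # Position-agnostic pairs cannot tell the two walks apart.
    print(Counter(skipgram_pairs(WALK_A, 2)) == Counter(skipgram_pairs(WALK_B, 2)))  # True
    # Keeping the offset separates "Alice knows Bob" from "Bob knows Alice".
    print(Counter(structured_pairs(WALK_A, 2)) == Counter(structured_pairs(WALK_B, 2)))  # False
```

In a full model the offset would pick one of `2 * window` output matrices, so a predicate such as `knows` could learn different representations for what precedes it (subjects) and what follows it (objects); the classic skip-gram collapses both roles into one.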

* Accepted at the ISWC 2021 posters and demos track
