Prototype Refinement Network for Few-Shot Segmentation

Feb 10, 2020

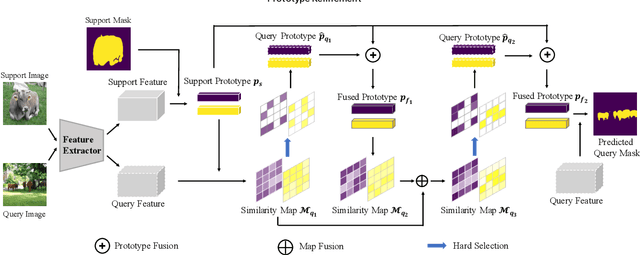

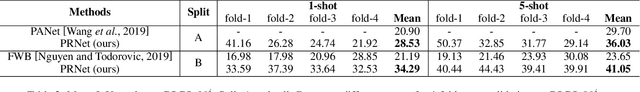

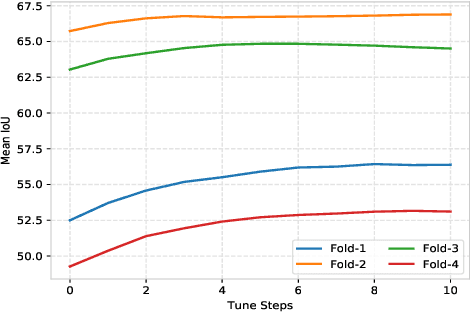

Few-shot segmentation aims to segment new classes given only a few annotated images. It is more challenging than traditional semantic segmentation, which segments pre-defined classes with abundant annotated data. In this paper, we propose the Prototype Refinement Network (PRNet) to address the challenge of few-shot segmentation. Unlike existing methods, PRNet learns to extract prototypes bidirectionally, from both support and query images. To extract representative prototypes of the new classes, we refine them through adaptation and fusion. Adaptation is implemented by fine-tuning PRNet on the support set. Furthermore, prototype fusion combines the support prototypes with the query prototypes, incorporating knowledge from both sides. Refined in this way, the prototypes become more discriminative in low-data regimes. Experiments on PASCAL-$5^i$ and COCO-$20^i$ demonstrate the superiority of our method. On COCO-$20^i$ in particular, PRNet outperforms previous methods by large margins of 13.1% in the 1-shot setting and 17.4% in the 5-shot setting.
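
The abstract does not state how prototypes are computed or fused. As a minimal sketch, assuming masked average pooling for prototype extraction, cosine similarity for labeling pixels, and a fixed weighted average (hypothetical weight `alpha`) for fusion, the bidirectional extract-then-fuse flow might look like this in plain Python. These are common choices in prototype-based few-shot segmentation, not necessarily PRNet's; the fine-tuning-based adaptation step is omitted, and every function name here is hypothetical.

```python
import math
from typing import List, Sequence

# Each pixel's feature is a list of floats; a feature map is flattened to one
# vector per pixel. All helper names are hypothetical, not from PRNet's code.
Vector = List[float]


def masked_average_pool(features: Sequence[Vector], mask: Sequence[int]) -> Vector:
    """Average the feature vectors of the pixels where mask == 1."""
    selected = [f for f, m in zip(features, mask) if m == 1]
    if not selected:
        raise ValueError("mask selects no pixels")
    dim = len(selected[0])
    return [sum(f[d] for f in selected) / len(selected) for d in range(dim)]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity, returning 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def segment(features: Sequence[Vector], fg: Vector, bg: Vector) -> List[int]:
    """Label a pixel 1 (foreground) if it is more similar to fg than to bg."""
    return [1 if cosine(f, fg) > cosine(f, bg) else 0 for f in features]


def fuse(support: Vector, query: Vector, alpha: float = 0.5) -> Vector:
    """Assumed fusion rule: a fixed convex combination of the two prototypes."""
    return [alpha * s + (1.0 - alpha) * q for s, q in zip(support, query)]


# Toy example: 4 pixels with 2-D features (values are illustrative only).
support_feats = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [0.2, 0.9]]
support_mask = [1, 1, 0, 0]
query_feats = [[0.8, 0.3], [0.2, 0.8], [1.0, 0.0], [0.0, 1.0]]

# Support direction: prototypes from the annotated support image.
fg_s = masked_average_pool(support_feats, support_mask)
bg_s = masked_average_pool(support_feats, [1 - m for m in support_mask])
initial_pred = segment(query_feats, fg_s, bg_s)

# Query direction: prototypes from the query, using its predicted mask.
fg_q = masked_average_pool(query_feats, initial_pred)
bg_q = masked_average_pool(query_feats, [1 - m for m in initial_pred])

# Fuse support and query prototypes, then segment the query again.
refined_pred = segment(query_feats, fuse(fg_s, fg_q), fuse(bg_s, bg_q))
print(initial_pred, refined_pred)  # [1, 0, 1, 0] [1, 0, 1, 0]
```

On this toy input the fused prototypes yield the same labels as the support-only ones; the sketch only illustrates the data flow, not the accuracy gains reported above.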
