Projected Belief Networks With Discriminative Alignment for Acoustic Event Classification: Rivaling State of the Art CNNs

Paper and Code

Jan 20, 2024

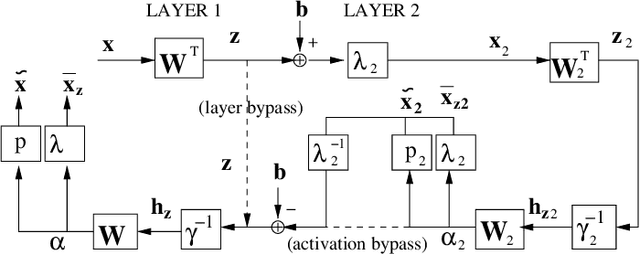

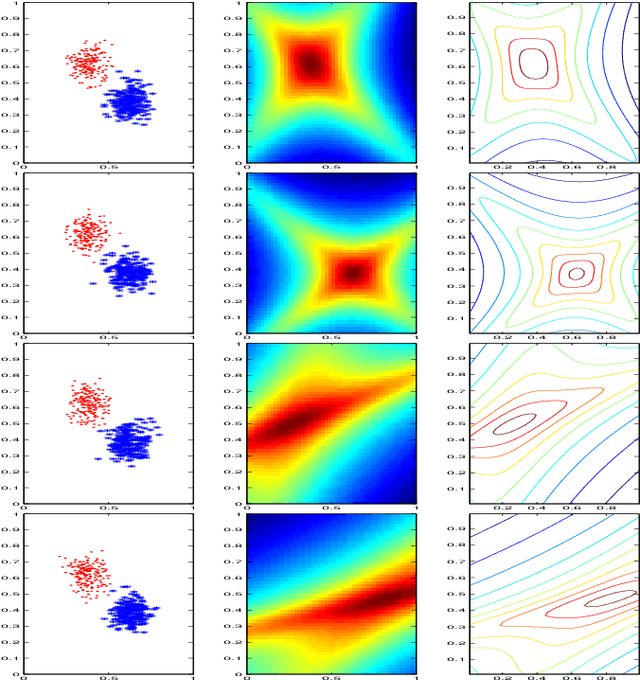

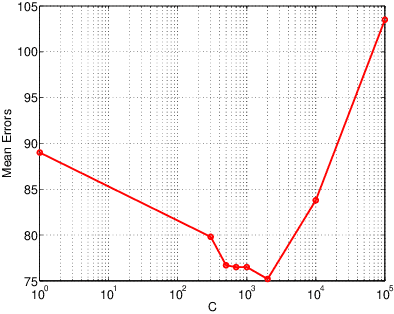

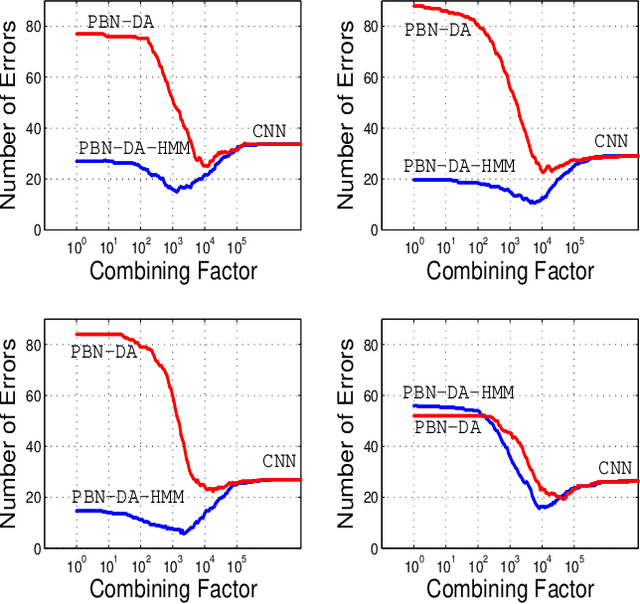

The projected belief network (PBN) is a generative stochastic network with tractable likelihood function based on a feed-forward neural network (FFNN). The generative function operates by "backing up" through the FFNN. The PBN is two networks in one, a FFNN that operates in the forward direction, and a generative network that operates in the backward direction. Both networks co-exist based on the same parameter set, have their own cost functions, and can be separately or jointly trained. The PBN therefore has the potential to possess the best qualities of both discriminative and generative classifiers. To realize this potential, a separate PBN is trained on each class, maximizing the generative likelihood function for the given class, while minimizing the discriminative cost for the FFNN against "all other classes". This technique, called discriminative alignment (PBN-DA), aligns the contours of the likelihood function to the decision boundaries and attains vastly improved classification performance, rivaling that of state of the art discriminative networks. The method may be further improved using a hidden Markov model (HMM) as a component of the PBN, called PBN-DA-HMM. This paper provides a comprehensive treatment of PBN, PBN-DA, and PBN-DA-HMM. In addition, the results of two new classification experiments are provided. The first experiment uses air-acoustic events, and the second uses underwater acoustic data consisting of marine mammal calls. In both experiments, PBN-DA-HMM attains comparable or better performance as a state of the art CNN, and attain a factor of two error reduction when combined with the CNN.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge