Probing TryOnGAN

Paper and Code

Jan 05, 2022

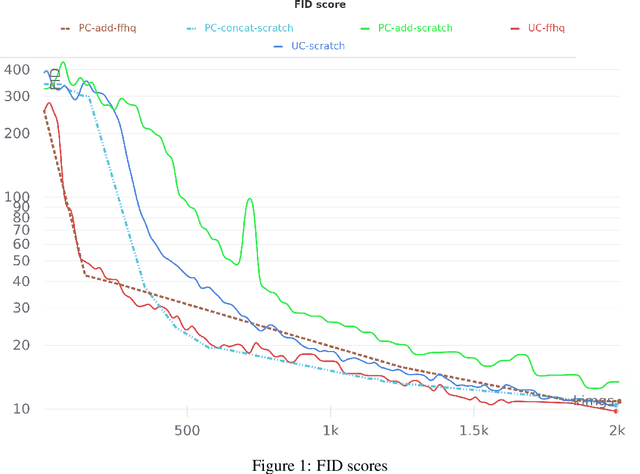

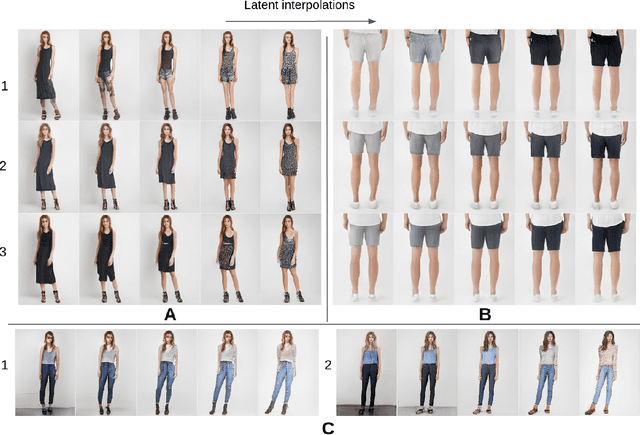

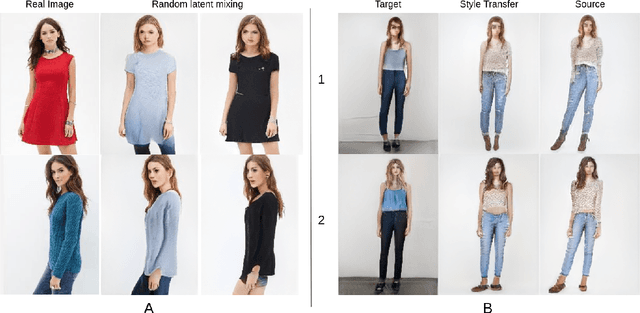

TryOnGAN is a recent virtual try-on approach that generates highly realistic images and outperforms most previous approaches. In this article, we reproduce the TryOnGAN implementation and probe it along several angles: the impact of transfer learning, variants of conditioning image generation on pose, and properties of latent space interpolation. Some of these facets have not been explored in the literature before. We find that transfer learning helps early in training, but the gains are lost as models train longer, and that pose conditioning via concatenation performs better. The latent space self-disentangles pose and style features, which enables style transfer across poses. Our code and models are available as open source.

* 5 pages, to appear in the proceedings of the 9th ACM IKDD CODS and 27th COMAD (CODS-COMAD '22)
