Private Learning on Networks

Paper and Code

Dec 15, 2016

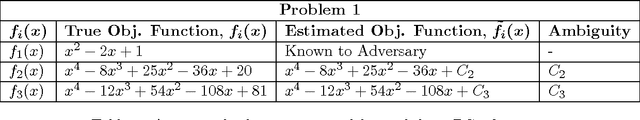

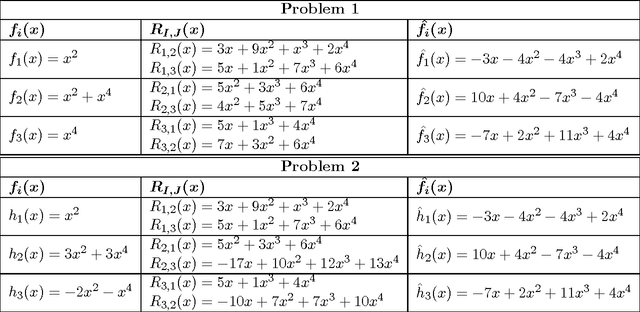

Continual data collection and the widespread deployment of machine learning algorithms, particularly distributed variants, have raised new privacy challenges. In a distributed machine learning scenario, the dataset is stored across several machines, which solve a distributed optimization problem to collectively learn the underlying model. We present a privacy-preserving distributed algorithm, inspired by secure multi-party computation, for optimizing a convex function consisting of several possibly non-convex functions. Each individual objective function is stored privately with an agent, while the agents communicate model parameters with neighboring machines connected in a network. We show that our algorithm correctly optimizes the overall objective function and learns the underlying model accurately. We further prove that, under a vertex connectivity condition on the topology, our algorithm preserves the privacy of individual objective functions. We establish limits on what a coalition of adversaries can learn by observing the messages and states shared over the network.
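The abstract does not spell out the mechanism, so the following is only a minimal sketch of one idea in the spirit of secure multi-party computation, not the paper's algorithm. It assumes the overall objective is a sum of scalar quadratics f_i(x) = 0.5*a_i*x^2 + b_i*x, and that neighboring agents exchange random masking functions that cancel in the aggregate. The names `obfuscate` and `minimizer_of_sum`, the quadratic form, the ring topology, and the `scale` parameter are all hypothetical choices made for illustration.

```python
import random

def obfuscate(coeffs, edges, rng, scale=10.0):
    """Mask each agent's objective with random quadratics traded along edges.

    coeffs[i] = (a_i, b_i) encodes f_i(x) = 0.5*a_i*x**2 + b_i*x (assumed form).
    Every mask is subtracted by its sender and added by its receiver,
    so the sum over all agents is unchanged.
    """
    masked = [list(c) for c in coeffs]
    for i, j in edges:
        # Each direction of the edge carries its own independent random mask.
        for src, dst in ((i, j), (j, i)):
            ra = rng.uniform(-scale, scale)
            rb = rng.uniform(-scale, scale)
            masked[src][0] -= ra
            masked[src][1] -= rb
            masked[dst][0] += ra
            masked[dst][1] += rb
    return [tuple(m) for m in masked]

def minimizer_of_sum(coeffs):
    """Minimizer of sum_i f_i, assuming the summed curvature is positive."""
    a = sum(c[0] for c in coeffs)
    b = sum(c[1] for c in coeffs)
    return -b / a

# Three agents on a ring; the second objective is non-convex on its own
# (a_1 < 0), but the sum is convex (total curvature 4 > 0).
coeffs = [(3.0, -1.0), (-1.0, 2.0), (2.0, 0.5)]
edges = [(0, 1), (1, 2), (2, 0)]
masked = obfuscate(coeffs, edges, random.Random(0))

print("true minimizer:  ", minimizer_of_sum(coeffs))
print("masked minimizer:", minimizer_of_sum(masked))
```

In this sketch the masked objectives differ from the private ones, yet their sum, and therefore its minimizer, matches the original up to floating-point rounding. It also hints at why connectivity matters: an agent whose neighbors all collude would have every one of its masks known to the coalition.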
