Private Collaborative Edge Inference via Over-the-Air Computation

Paper and Code

Jul 30, 2024

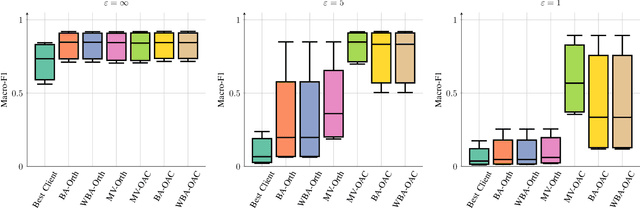

We consider collaborative inference at the wireless edge, where each client's model is trained independently on its own local dataset. Clients are queried in parallel to make an accurate decision collaboratively. In addition to maximizing inference accuracy, we also want to preserve the privacy of the local models. To this end, we leverage the superposition property of the multiple access channel to implement bandwidth-efficient multi-user inference methods. Specifically, we propose different methods for ensemble and multi-view classification that exploit over-the-air computation. We show that these schemes outperform their orthogonal counterparts by statistically significant margins while using fewer resources and providing privacy guarantees. We also provide experimental results verifying the benefits of the proposed over-the-air multi-user inference approach and perform an ablation study to demonstrate the effectiveness of our design choices. We share the source code of the framework publicly on GitHub to facilitate further research and reproducibility.
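To make the superposition idea concrete, the following is a minimal sketch, not the paper's scheme. It assumes an idealized Gaussian multiple access channel with unit channel gains and perfect synchronization, and it assumes each client transmits its class-probability vector uncoded. The function names, the noise model, and the example values are illustrative assumptions; the paper's actual transmission and privacy mechanisms are not reproduced here.

```python
import numpy as np


def over_the_air_ensemble(client_probs, noise_std, rng):
    """Hypothetical over-the-air ensemble under an idealized Gaussian MAC.

    client_probs: array of shape (num_clients, num_classes).
    All clients transmit at once, so the server receives only the sum
    of their signals plus receiver noise (assumed unit channel gains).
    """
    superposed = client_probs.sum(axis=0)  # the channel adds the signals
    received = superposed + rng.normal(0.0, noise_std, size=superposed.shape)
    return int(np.argmax(received)), received


def orthogonal_ensemble(client_probs, noise_std, rng):
    """Baseline for comparison: each client gets its own channel uses,
    so the server sees every client's noisy vector and sums them itself."""
    noisy = client_probs + rng.normal(0.0, noise_std, size=client_probs.shape)
    return int(np.argmax(noisy.sum(axis=0))), noisy


# Made-up predictions from three clients over three classes.
probs = np.array([[0.7, 0.2, 0.1],
                  [0.6, 0.3, 0.1],
                  [0.2, 0.5, 0.3]])
rng = np.random.default_rng(0)
ota_label, ota_signal = over_the_air_ensemble(probs, noise_std=0.1, rng=rng)
orth_label, orth_signals = orthogonal_ensemble(probs, noise_std=0.1, rng=rng)
```

In this toy model the over-the-air variant occupies `num_classes` channel uses regardless of how many clients participate, whereas the orthogonal baseline needs `num_clients * num_classes`. The server in the over-the-air variant also observes only the aggregate, never an individual client's vector.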
