Privacy Preserving Text Recognition with Gradient-Boosting for Federated Learning


Jul 14, 2020

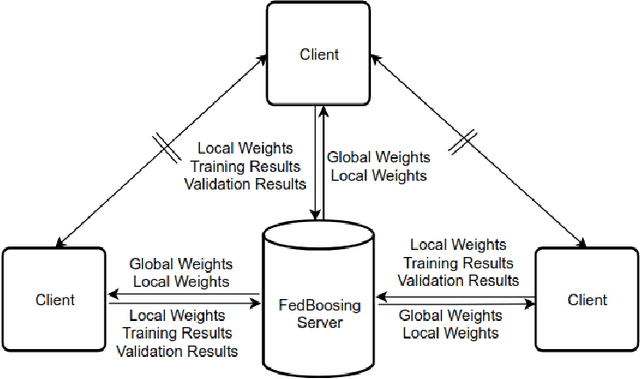

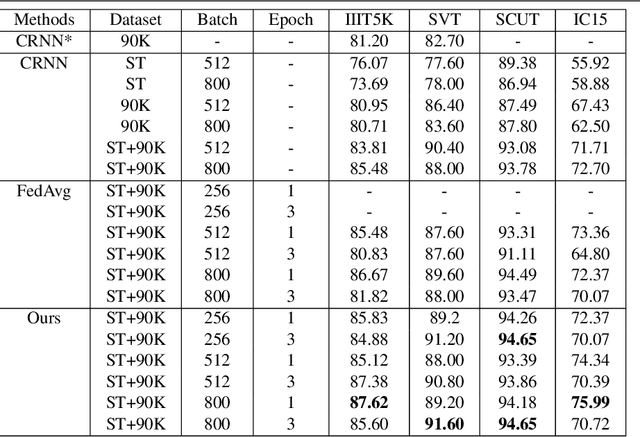

Typical machine learning approaches require centralized data for model training, which may not be possible where restrictions on data sharing are in place, for instance for privacy protection. The recently proposed Federated Learning (FL) framework allows a shared model to be learned collaboratively without centralizing data or sharing it among data owners. However, we show in this paper that the generalization ability of the joint model is poor on Non-Independent and Non-Identically Distributed (Non-IID) data, particularly when the Federated Averaging (FedAvg) strategy is used in this collaborative learning framework, owing to the weight divergence phenomenon. We propose a novel boosting algorithm for FL that addresses this generalization issue and achieves much faster convergence in gradient-based optimization. We demonstrate our Federated Boosting (FedBoost) method on privacy-preserving text recognition, where it shows significant improvements in both performance and efficiency. The text images are based on publicly available datasets for fair comparison, and we intend to make our implementation public to ensure reproducibility.
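For readers unfamiliar with the baseline named in the abstract, the following is a minimal sketch of the standard FedAvg aggregation step: the server averages client parameters, weighting each client by its number of local training samples. This is the baseline, not the proposed FedBoost method, whose details are not given in the abstract. The names `fedavg`, `client_weights`, and `client_sizes` are hypothetical, and parameters are represented as plain lists of floats for illustration only.

```python
from typing import Dict, List, Sequence

# Hypothetical representation: parameter name -> flat list of values.
Weights = Dict[str, List[float]]


def fedavg(client_weights: Sequence[Weights], client_sizes: Sequence[int]) -> Weights:
    """Average client parameters, weighted by local sample counts."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    averaged: Weights = {}
    for name in client_weights[0]:
        length = len(client_weights[0][name])
        # Each coordinate is the sample-weighted mean across clients.
        averaged[name] = [
            sum(w[name][i] * n for w, n in zip(client_weights, client_sizes)) / total
            for i in range(length)
        ]
    return averaged


if __name__ == "__main__":
    # Two clients with different amounts of local data.
    clients = [{"w": [1.0, 2.0]}, {"w": [3.0, 6.0]}]
    print(fedavg(clients, [1, 3]))
```

Because the average is weighted by sample count, clients whose local data distributions differ (the Non-IID case) can pull the shared parameters in conflicting directions, which is the setting in which the abstract reports poor generalization.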
