Principal Fairness for Human and Algorithmic Decision-Making

Jun 13, 2020

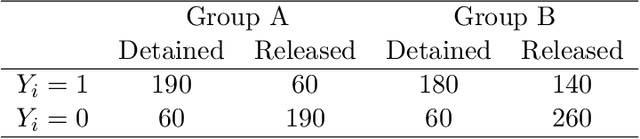

Using the concept of principal stratification from the causal inference literature, we introduce a new notion of fairness, called principal fairness, for human and algorithmic decision-making. The key idea is that one should not discriminate among individuals who would be similarly affected by the decision. Unlike the existing statistical definitions of fairness, principal fairness explicitly accounts for the fact that individuals can be influenced by the decision. We motivate principal fairness by the belief that all people are created equal, implying that the potential outcomes should not depend on protected attributes such as race and gender once we adjust for relevant covariates. Under this assumption, we show that principal fairness implies all three existing statistical fairness criteria, thereby resolving the previously recognized tradeoffs between them. Finally, we discuss how to empirically evaluate the principal fairness of a particular decision.
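The key idea and the covariate-adjustment assumption can be written out formally. The block below is a sketch in standard potential-outcomes notation. The symbols $A$, $D$, $Y(d)$, $R$, and $X$ do not appear in the abstract and are assumptions introduced here for illustration; the paper's own notation and exact conditions may differ.

```latex
% Notation (assumed for illustration, not taken from the abstract):
%   A    protected attribute (e.g., race or gender)
%   D    binary decision, D \in \{0, 1\}
%   Y(d) potential outcome under decision d
%   R    principal stratum, R = (Y(0), Y(1))
%   X    observed covariates
\begin{align*}
  \text{Principal fairness:} \quad
    & \Pr(D = 1 \mid R = r, A = a) = \Pr(D = 1 \mid R = r)
      \quad \text{for all } a, r, \\
  \text{Assumption:} \quad
    & \Pr(R = r \mid X = x, A = a) = \Pr(R = r \mid X = x)
      \quad \text{for all } a, r, x.
\end{align*}
```

Under this reading, individuals in the same principal stratum $R$ are those who "would be similarly affected by the decision". Because only one of $Y(0)$ and $Y(1)$ is observed for any individual, $R$ is never fully observed, which is why empirically evaluating principal fairness requires further assumptions.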