Primal-Dual Optimization Algorithms over Riemannian Manifolds: an Iteration Complexity Analysis


Oct 05, 2017

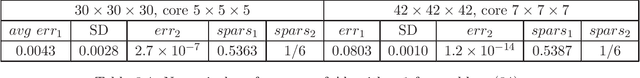

In this paper we study nonconvex and nonsmooth multi-block optimization over Riemannian manifolds with coupled linear constraints. Such optimization problems arise naturally in machine learning, statistical learning, compressive sensing, image processing, and tensor PCA, among others. We develop an ADMM-like primal-dual approach based on decoupled solvable subroutines such as linearized proximal mappings. First, we introduce the optimality conditions for the aforementioned optimization models, which in turn lead to the notion of $\epsilon$-stationary solutions. The main result of the paper is that the proposed algorithms enjoy an iteration complexity of $O(1/\epsilon^2)$ to reach an $\epsilon$-stationary solution. For prohibitively large tensor or machine learning models, we present a sampling-based stochastic algorithm with the same iteration complexity bound in expectation. In case the subproblems are not analytically solvable, we propose a feasible curvilinear line-search variant of the algorithm based on retraction operators. Finally, we show specifically how the algorithms can be implemented to solve a variety of practical problems, such as the NP-hard maximum bisection problem, $\ell_q$-regularized sparse tensor principal component analysis, and the community detection problem. Our preliminary numerical results show the great potential of the proposed methods.
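To make the idea of a feasible, retraction-based curvilinear line search concrete, here is a minimal sketch on a toy problem. It is not the paper's algorithm: it assumes a single block on the unit sphere, the smooth objective $f(x) = -x^\top A x$, no linear constraints, and a plain Armijo backtracking rule. The function names (`retract`, `riemannian_grad`, `curvilinear_search`) and all parameter values are hypothetical, chosen only for illustration.

```python
import numpy as np

def retract(x, v):
    """Retraction on the unit sphere: move along tangent vector v, then renormalize."""
    y = x + v
    return y / np.linalg.norm(y)

def riemannian_grad(A, x):
    """Riemannian gradient of f(x) = -x^T A x (A symmetric) on the unit sphere:
    the Euclidean gradient projected onto the tangent space at x."""
    g = -2.0 * (A @ x)
    return g - (x @ g) * x

def curvilinear_search(A, x0, eps=1e-5, max_iter=10000, beta=0.5, sigma=1e-4):
    """Gradient descent along retraction curves with Armijo backtracking.
    Every iterate stays on the sphere, so the method is feasible by construction.
    Stops when the Riemannian gradient norm is at most eps."""
    f = lambda z: -(z @ A @ z)
    x = x0 / np.linalg.norm(x0)
    iters = 0
    for iters in range(max_iter):
        g = riemannian_grad(A, x)
        gnorm = np.linalg.norm(g)
        if gnorm <= eps:
            break
        t = 1.0
        # Backtrack along the curve t -> retract(x, -t * g) until sufficient decrease.
        while f(retract(x, -t * g)) > f(x) - sigma * t * gnorm**2 and t > 1e-12:
            t *= beta
        x = retract(x, -t * g)
    return x, iters

if __name__ == "__main__":
    A = np.diag([3.0, 1.0, 0.5])
    x, iters = curvilinear_search(A, np.ones(3))
    print("iterations:", iters)
    print("x:", x)
    print("gradient norm:", np.linalg.norm(riemannian_grad(A, x)))
```

The stopping test on the Riemannian gradient norm plays the role of an $\epsilon$-stationarity check for this unconstrained toy case; the paper's notion also accounts for the nonsmooth terms and the coupled linear constraints, which this sketch omits.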
