POTs: Protective Optimization Technologies

Aug 30, 2018

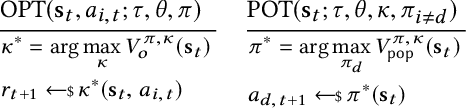

In spite of their many advantages, optimization systems often neglect the economic, ethical, moral, social, and political impact they have on populations and their environments. In this paper, we argue that the frameworks through which the discontents of optimization systems have been approached so far cover only a narrow subset of these problems by (i) assuming that the system provider has the incentives and means to mitigate the imbalances optimization causes, (ii) disregarding problems that go beyond discrimination due to disparate treatment or impact in algorithmic decision making, and (iii) developing solutions focused on removing algorithmic biases related to discrimination. In response, we introduce Protective Optimization Technologies (POTs): solutions that enable optimization subjects to defend themselves against unwanted consequences. We provide a framework that formalizes the design space of POTs and show how it differs from other design paradigms in the literature. We show how the framework can capture strategies developed in the wild against real optimization systems, and how it can be used to design, implement, and evaluate a POT that enables individuals and collectives to protect themselves from imbalances in a credit scoring application related to loan allocation.
