Position Paper on Simulating Privacy Dynamics in Recommender Systems


Sep 14, 2021

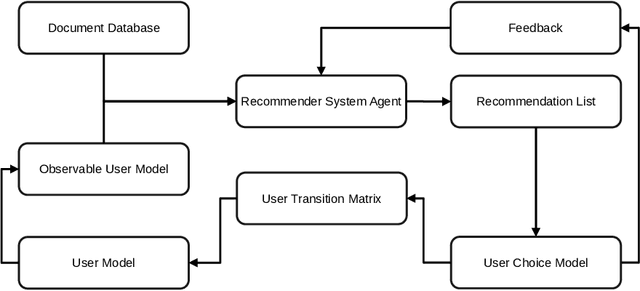

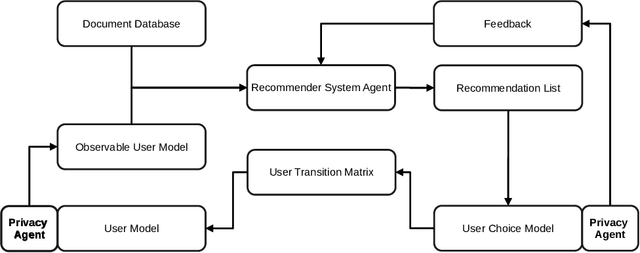

In this position paper, we discuss the merits of simulating privacy dynamics in recommender systems. We approach this issue from two perspectives. First, we present a conceptual approach to integrating privacy into recommender system simulations, whose key elements are privacy agents. These agents can enhance users' profiles with different privacy preferences, e.g., their inclination to disclose data to the recommender system. In addition, they can protect users' privacy by guarding all actions that could be a threat to privacy. For example, agents can prohibit a user's privacy-threatening actions or apply privacy-enhancing techniques, e.g., Differential Privacy, to make actions less threatening. Second, we identify three critical topics for future research in privacy-aware recommender system simulations: (i) How could we model users' privacy preferences and protect users from performing any privacy-threatening actions? (ii) To what extent do privacy agents modify the users' document preferences? (iii) How do privacy preferences and privacy protections impact recommendations and the privacy of others? Our conceptual privacy-aware simulation approach makes it possible to investigate the impact of privacy preferences and privacy protection at the micro level, i.e., for a single user, and also at the macro level, i.e., across all recommender system users. With this work, we hope to present perspectives on how privacy-aware simulations could be realized, such that they enable researchers to study the dynamics of privacy within a recommender system.
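To make the notion of a privacy agent more concrete, the following is a minimal Python sketch, not the authors' implementation. It assumes that a user's action is a numeric rating on a 1 to 5 scale, that the privacy preference is a single disclosure probability, and that protection is the standard Laplace mechanism with a per-rating budget `epsilon`. The names `PrivacyAgent`, `guard`, `simulate`, `disclosure_prob`, and `epsilon` are hypothetical and chosen only for illustration.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PrivacyAgent:
    """Hypothetical privacy agent guarding one simulated user's ratings."""
    disclosure_prob: float   # assumed preference: chance of disclosing an action
    epsilon: float           # assumed privacy budget per disclosed rating
    min_rating: float = 1.0  # assumed rating scale
    max_rating: float = 5.0

    def guard(self, rating: float, rng: random.Random) -> Optional[float]:
        # Option 1: prohibit the action, so nothing reaches the recommender.
        if rng.random() >= self.disclosure_prob:
            return None
        # Option 2: make the action less threatening with Laplace noise.
        # The sensitivity of a single rating is the width of the rating scale.
        scale = (self.max_rating - self.min_rating) / self.epsilon
        # The difference of two i.i.d. exponentials is Laplace-distributed.
        noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
        # Clipping is post-processing and does not weaken the guarantee.
        return min(self.max_rating, max(self.min_rating, rating + noise))


def simulate(agents: List[PrivacyAgent], true_ratings: List[float],
             seed: int = 0) -> List[Optional[float]]:
    """Return what the recommender would observe for each user (macro level)."""
    rng = random.Random(seed)
    return [agent.guard(r, rng) for agent, r in zip(agents, true_ratings)]
```

In such a sketch, inspecting the output of `guard` for one agent corresponds to the micro level, while feeding the list returned by `simulate` into a recommender and comparing it against the unprotected ratings corresponds to the macro level.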
