Pop Music Transformer: Generating Music with Rhythm and Harmony

Paper and Code

Feb 01, 2020

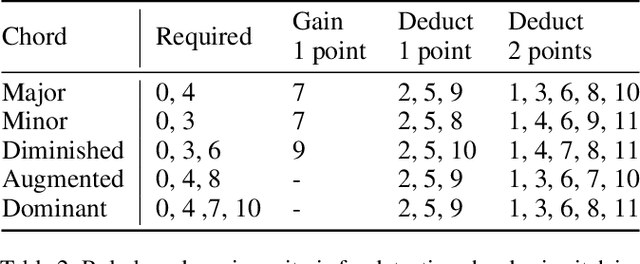

The task of automatic music composition entails generative modeling of music in symbolic formats such as musical scores. By serializing a score as a sequence of MIDI-like events, recent work has demonstrated that state-of-the-art sequence models with self-attention work well for this task, especially for composing music with long-range coherence. In this paper, we show that sequence models can do even better when we improve the way a musical score is converted into events. The new event set, dubbed "REMI" (REvamped MIDI-derived events), provides sequence models with a metrical context for modeling the rhythmic patterns of music, while allowing for local tempo changes. Moreover, it explicitly sets up a harmonic structure and makes chord progressions controllable. It also facilitates coordinating the different tracks of a musical piece, such as the piano, bass and drums. With this new approach, we build a Pop Music Transformer that composes Pop piano music with a more plausible rhythmic structure than prior work does. The code, data and pre-trained model are publicly available at https://github.com/YatingMusic/remi.
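To make the idea of a metrical context concrete, the following is a minimal sketch of how notes might be serialized with explicit bar and position events rather than relative time shifts. It is an illustration only, not the released implementation: the `Note` class, the `to_bar_position_events` function, the token names, the 480 ticks per beat, the 4/4 meter and the 16-step grid are all assumptions made for this example, and the tempo and chord events mentioned above are omitted.

```python
from dataclasses import dataclass

# Assumed constants for this sketch (not taken from the paper or its code).
TICKS_PER_BEAT = 480      # MIDI-style time resolution
BEATS_PER_BAR = 4         # assumes 4/4 time throughout
POSITIONS_PER_BAR = 16    # assumes a 16-step grid within each bar


@dataclass
class Note:
    """Hypothetical note record used only for this example."""
    start: int      # onset time in ticks
    duration: int   # length in ticks
    pitch: int      # MIDI pitch number (60 = middle C)
    velocity: int   # MIDI velocity (0-127)


def to_bar_position_events(notes):
    """Serialize notes into a token list with explicit Bar/Position markers."""
    ticks_per_bar = TICKS_PER_BEAT * BEATS_PER_BAR
    ticks_per_position = ticks_per_bar // POSITIONS_PER_BAR
    events = []
    current_bar = -1
    for note in sorted(notes, key=lambda n: (n.start, n.pitch)):
        bar = note.start // ticks_per_bar
        # Emit one "Bar" token per bar boundary crossed, including empty bars,
        # so the model always sees where each bar begins.
        while current_bar < bar:
            current_bar += 1
            events.append("Bar")
        # Position of the onset on the grid within the bar (1-based, floored).
        position = (note.start % ticks_per_bar) // ticks_per_position + 1
        # Duration measured in grid steps, at least one step.
        duration = max(1, round(note.duration / ticks_per_position))
        events.append(f"Position_{position}/{POSITIONS_PER_BAR}")
        events.append(f"Velocity_{note.velocity}")
        events.append(f"NoteOn_{note.pitch}")
        events.append(f"Duration_{duration}")
    return events


if __name__ == "__main__":
    melody = [Note(0, 480, 60, 64), Note(1920, 240, 64, 80)]
    print(to_bar_position_events(melody))
```

In this sketch every note is located by its bar and its position within that bar, so the sequence itself carries the beat grid instead of leaving the model to infer it by accumulating time shifts.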
