PlutoNet: An Efficient Polyp Segmentation Network


Apr 12, 2022

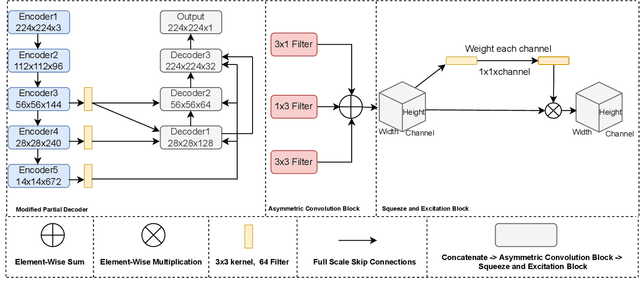

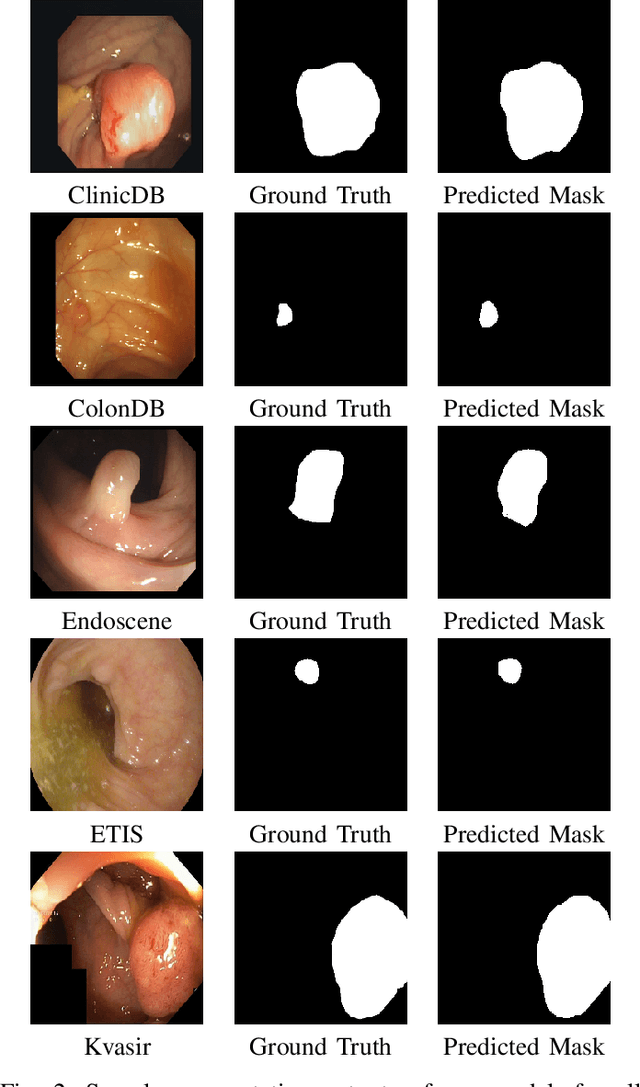

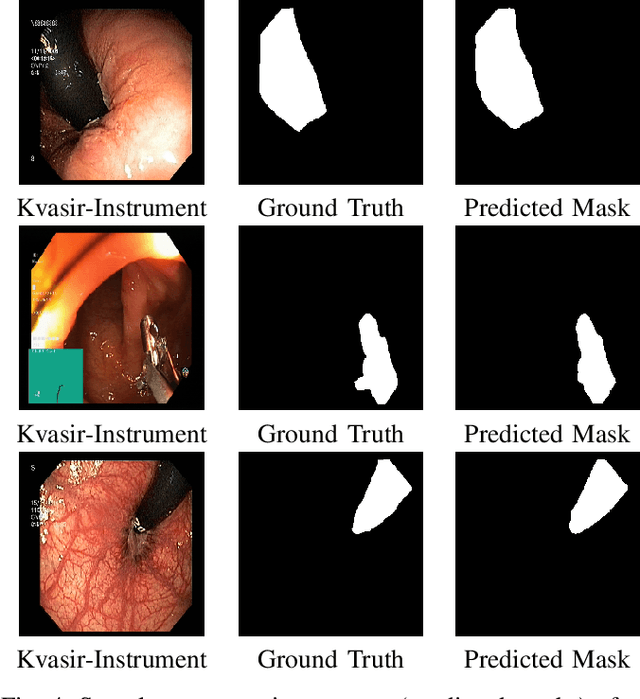

Polyps in the colon can become cancerous if they are not removed through early intervention. Deep learning models are used to reduce the number of polyps that go unnoticed by experts and to accurately segment the detected polyps during these interventions. Although these models perform well on these tasks, they require a large number of parameters, which can be a problem for real-time applications. To address this problem, we propose a novel segmentation model called PlutoNet, which requires only 2,626,337 parameters while outperforming state-of-the-art models on multiple medical image segmentation tasks. We use the EfficientNetB0 architecture as a backbone and propose the novel *modified partial decoder*, a combination of a partial decoder and full-scale connections, which further reduces the number of parameters required while also capturing semantic details. We use asymmetric convolutions to handle varying polyp sizes. Finally, we weight each feature map using a squeeze-and-excitation block to improve segmentation. In addition to polyp segmentation in colonoscopy, we tested our model on the segmentation of nuclei and surgical instruments to demonstrate its generalizability to different medical image segmentation tasks. Our model outperformed the state-of-the-art models with a Dice score of 92.3% on the CVC-ClinicDB dataset and 89.3% on the EndoScene dataset, a Dice score of 91.93% on the 2018 Data Science Bowl Challenge dataset, and a Dice score of 94.8% on the Kvasir-Instrument dataset. Our experiments and ablation studies show that our model is superior in terms of accuracy and that it is able to generalize well to multiple medical segmentation tasks.
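The results above are reported as Dice scores. As a reminder of what that metric measures, here is a minimal sketch of the standard Dice coefficient, 2|A ∩ B| / (|A| + |B|), for two binary masks. It is not taken from the PlutoNet code: the function name `dice_score`, the nested-list mask format, and the convention of returning 1.0 when both masks are empty are assumptions made for illustration.

```python
def dice_score(pred, target):
    """Dice coefficient between two binary masks given as nested lists of 0/1."""
    # Flatten both masks so they can be compared pixel by pixel.
    flat_pred = [int(v) for row in pred for v in row]
    flat_target = [int(v) for row in target for v in row]
    if len(flat_pred) != len(flat_target):
        raise ValueError("masks must contain the same number of pixels")

    # Pixels marked as foreground in both masks.
    intersection = sum(p * t for p, t in zip(flat_pred, flat_target))
    total = sum(flat_pred) + sum(flat_target)

    # Assumed convention: two empty masks count as perfect agreement.
    if total == 0:
        return 1.0
    return 2.0 * intersection / total


# Hypothetical 2x3 masks: 2 overlapping foreground pixels, 3 foreground pixels each.
pred = [[1, 1, 0],
        [0, 1, 0]]
target = [[1, 0, 0],
          [0, 1, 1]]
print(round(dice_score(pred, target), 4))  # 0.6667
```

A score of 1.0 means the predicted mask matches the ground truth exactly, and 0.0 means there is no overlap. Evaluation pipelines commonly compute the score per image and average it over the dataset, though the exact averaging used in the paper is not stated here.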
