PLOT-TAL -- Prompt Learning with Optimal Transport for Few-Shot Temporal Action Localization

Paper and Code

Mar 27, 2024

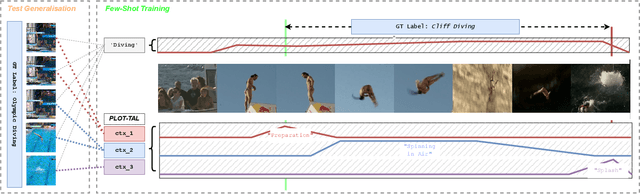

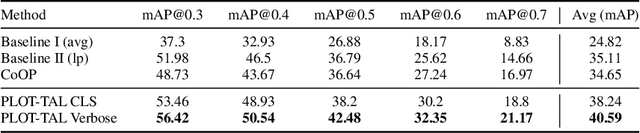

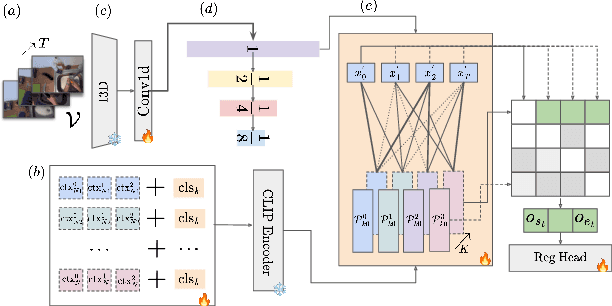

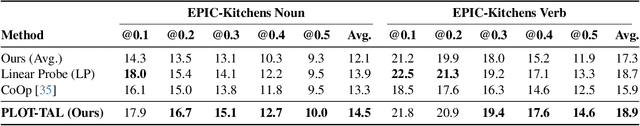

This paper introduces a novel approach to temporal action localization (TAL) in few-shot learning. Our work addresses the inherent limitations of conventional single-prompt learning methods that often lead to overfitting due to the inability to generalize across varying contexts in real-world videos. Recognizing the diversity of camera views, backgrounds, and objects in videos, we propose a multi-prompt learning framework enhanced with optimal transport. This design allows the model to learn a set of diverse prompts for each action, capturing general characteristics more effectively and distributing the representation to mitigate the risk of overfitting. Furthermore, by employing optimal transport theory, we efficiently align these prompts with action features, optimizing for a comprehensive representation that adapts to the multifaceted nature of video data. Our experiments demonstrate significant improvements in action localization accuracy and robustness in few-shot settings on the standard challenging datasets of THUMOS-14 and EpicKitchens100, highlighting the efficacy of our multi-prompt optimal transport approach in overcoming the challenges of conventional few-shot TAL methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge