Plasticine3D: Non-rigid 3D editing with text guidance


Dec 15, 2023

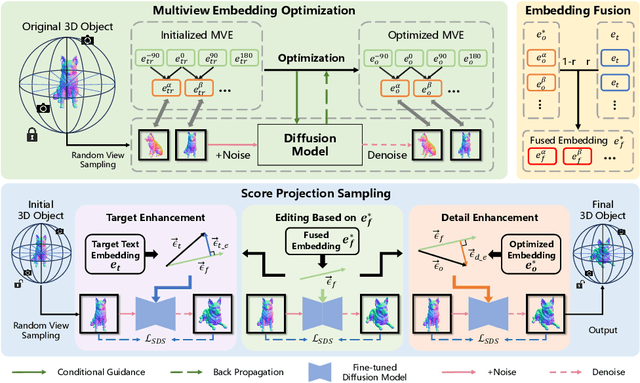

With the help of Score Distillation Sampling (SDS) and the rapid development of trainable 3D representations, Text-to-Image (T2I) diffusion models have been applied to 3D generation tasks with considerable success. There have also been attempts to edit 3D objects using this Text-to-3D pipeline. However, most current methods focus on adding geometry, overwriting textures, or both, and few of them can perform non-rigid transformations of 3D objects. Those that can perform non-rigid editing, on the other hand, suffer from low resolution, lack of fidelity, and poor flexibility. To address these issues, we present Plasticine3D, a general, high-fidelity, photo-realistic, and controllable non-rigid editing pipeline. First, our work divides the editing process into a geometry editing stage and a texture editing stage to achieve more detailed and photo-realistic results. Second, to perform non-rigid transformations with controllable results while maintaining fidelity to the original 3D model, we propose a multi-view-embedding (MVE) optimization strategy, which ensures that the diffusion model learns the overall features of the original object, and an embedding-fusion (EF) strategy, which controls the degree of editing through the value of the fusing rate. We also design a geometry processing step, applied before optimizing the base geometry, to cope with the different needs of various editing tasks. Furthermore, to fully leverage the geometric prior of the original 3D object, we provide an optional replacement for score distillation sampling, named score projection sampling (SPS), which enables us to optimize directly from the original 3D mesh in most common moderate non-rigid editing scenarios. We demonstrate the effectiveness of our method on both the non-rigid 3D editing task and the general 3D editing task.
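The abstract does not say how embedding fusion is computed. The following is a minimal sketch, assuming that EF is a linear interpolation between two embeddings. The first is an embedding optimized to describe the original object, for example by the multi-view-embedding step. The second is the embedding of the target edit prompt. Under this assumption, the fusing rate serves as the interpolation weight. `fuse_embeddings` and `fusing_rate` are hypothetical names and do not come from the authors' code. The 77×768 shape mirrors the CLIP text-encoder output used by Stable Diffusion v1 and is only illustrative.

```python
import numpy as np


def fuse_embeddings(e_source: np.ndarray, e_target: np.ndarray, fusing_rate: float) -> np.ndarray:
    """Hypothetical embedding fusion (assumed to be linear interpolation).

    e_source: embedding assumed to capture the original object.
    e_target: embedding of the target edit prompt.
    fusing_rate: 0.0 returns e_source unchanged, 1.0 returns e_target.
    """
    if not 0.0 <= fusing_rate <= 1.0:
        raise ValueError("fusing_rate must be in [0, 1]")
    if e_source.shape != e_target.shape:
        raise ValueError("embeddings must have the same shape")
    # Weighted blend: higher fusing_rate moves the conditioning toward the edit prompt.
    return (1.0 - fusing_rate) * e_source + fusing_rate * e_target


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    e_src = rng.standard_normal((77, 768))  # illustrative placeholder for an optimized embedding
    e_tgt = rng.standard_normal((77, 768))  # illustrative placeholder for the edit-prompt embedding
    for rate in (0.0, 0.5, 1.0):
        fused = fuse_embeddings(e_src, e_tgt, rate)
        # Distance from the source embedding grows linearly with the fusing rate.
        print(rate, np.linalg.norm(fused - e_src))
```

Under this assumption, a rate of 0 keeps the diffusion model conditioned on the original object, and raising the rate strengthens the edit. The fusing rate therefore acts as a single knob on how far the result departs from the source model.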
