Planning under Uncertainty to Goal Distributions


Nov 09, 2020

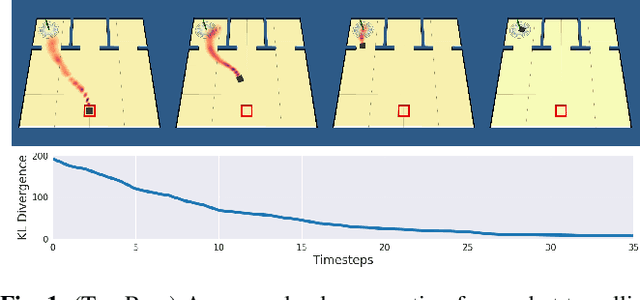

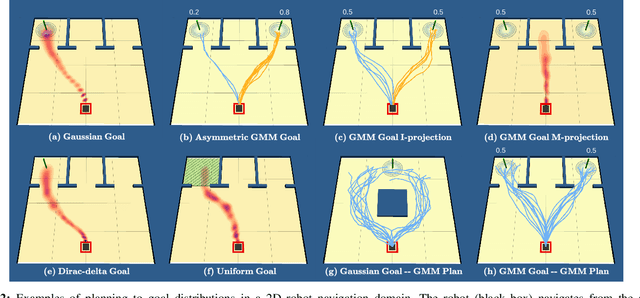

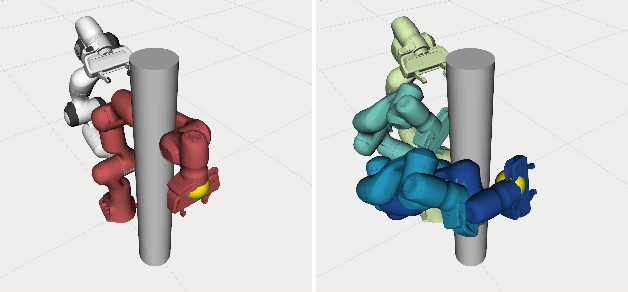

Goal spaces for planning problems are typically conceived of as subsets of the state space. It is common to select a particular goal state to plan to, and the agent monitors its progress toward the goal with a distance function defined over the state space. Due to numerical imprecision, state uncertainty, and stochastic dynamics, the agent will be unable to arrive at a particular state in a verifiable manner. It is therefore common to consider a goal achieved if the agent reaches a state within a small distance threshold of the goal. This approximation fails to explicitly account for the agent's state uncertainty. Point-based goals also do not accommodate the goal uncertainty that arises when goals are estimated in a data-driven way. We argue that goal distributions are a more appropriate goal representation and present a novel approach to planning under uncertainty to goal distributions. We use the unscented transform to propagate state uncertainty under stochastic dynamics and use the cross-entropy method to minimize the Kullback-Leibler divergence between the current state distribution and the goal distribution. We derive reductions of our cost function to commonly used goal-reaching costs such as weighted Euclidean distance, goal set indicators, chance-constrained goal sets, and maximum expectation of reaching a goal point. We explore different combinations of goal distributions, planner distributions, and divergences to illustrate behaviors achievable in our framework.
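To make the pipeline described above more concrete, the following is a minimal sketch, not the authors' implementation. It assumes a Gaussian state distribution and a Gaussian goal distribution, additive Gaussian process noise, and a cost equal to the KL divergence from the terminal state distribution to the goal (the direction of the divergence is an assumption here). The function names (`gaussian_kl`, `unscented_step`, `plan_cem`), the `kappa` sigma-point parameter, and all default hyperparameters are hypothetical choices made for illustration.

```python
import numpy as np


def gaussian_kl(mu_p, cov_p, mu_q, cov_q):
    """Closed-form KL(p || q) between two multivariate Gaussians."""
    d = mu_p.shape[0]
    cov_q_inv = np.linalg.inv(cov_q)
    diff = mu_q - mu_p
    _, logdet_p = np.linalg.slogdet(cov_p)
    _, logdet_q = np.linalg.slogdet(cov_q)
    return 0.5 * (np.trace(cov_q_inv @ cov_p) + diff @ cov_q_inv @ diff
                  - d + logdet_q - logdet_p)


def unscented_step(mu, cov, u, dynamics, noise_cov, kappa=1.0):
    """Propagate a Gaussian (mu, cov) one step through `dynamics(x, u)`
    using 2d+1 sigma points; additive noise is assumed."""
    d = mu.shape[0]
    # Columns of the Cholesky factor give the sigma-point offsets.
    chol = np.linalg.cholesky((d + kappa) * cov)
    sigma = np.vstack([mu, mu + chol.T, mu - chol.T])
    weights = np.full(2 * d + 1, 1.0 / (2.0 * (d + kappa)))
    weights[0] = kappa / (d + kappa)
    propagated = np.array([dynamics(x, u) for x in sigma])
    new_mu = weights @ propagated
    centered = propagated - new_mu
    new_cov = (weights[:, None] * centered).T @ centered + noise_cov
    return new_mu, new_cov


def plan_cem(mu0, cov0, goal_mu, goal_cov, dynamics, noise_cov,
             horizon=10, ctrl_dim=2, iters=20, pop=100, n_elite=10, seed=0):
    """Cross-entropy method over open-loop control sequences.
    Cost: KL from the terminal state distribution to the goal distribution."""
    rng = np.random.default_rng(seed)
    mean = np.zeros((horizon, ctrl_dim))
    std = np.ones((horizon, ctrl_dim))

    def cost(controls):
        mu, cov = mu0, cov0
        for u in controls:
            mu, cov = unscented_step(mu, cov, u, dynamics, noise_cov)
        return gaussian_kl(mu, cov, goal_mu, goal_cov)

    for _ in range(iters):
        samples = mean + std * rng.standard_normal((pop, horizon, ctrl_dim))
        costs = np.array([cost(s) for s in samples])
        elite = samples[np.argsort(costs)[:n_elite]]
        # Refit the sampling distribution to the elite set.
        mean = elite.mean(axis=0)
        std = elite.std(axis=0) + 1e-6
    return mean, cost(mean)


# Hypothetical toy problem: a 2D single integrator, x' = x + 0.1 * u.
def toy_dynamics(x, u):
    return x + 0.1 * u


controls, final_kl = plan_cem(
    mu0=np.zeros(2), cov0=0.01 * np.eye(2),
    goal_mu=np.ones(2), goal_cov=0.05 * np.eye(2),
    dynamics=toy_dynamics, noise_cov=0.001 * np.eye(2),
)
print("KL to goal after planning:", final_kl)
```

In this toy setup the terminal covariance does not depend on the controls, so the planner can only reduce the mean-offset term of the divergence. With nonlinear dynamics the controls would also shape the propagated covariance, which is where a distribution-matching cost differs from a plain distance to a goal point.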
