Place Recognition for Stereo Visual Odometry using LiDAR Descriptors


Sep 18, 2019

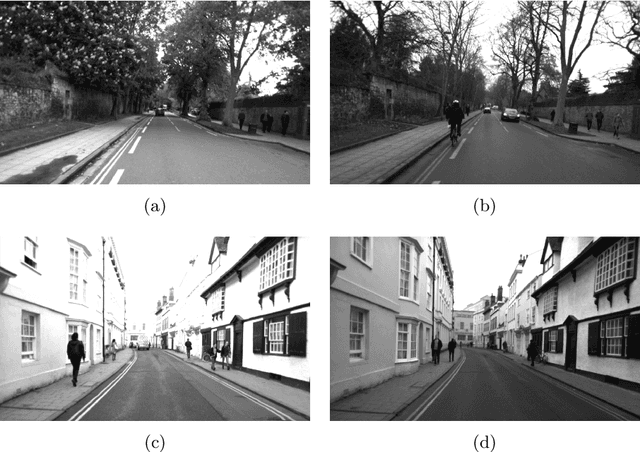

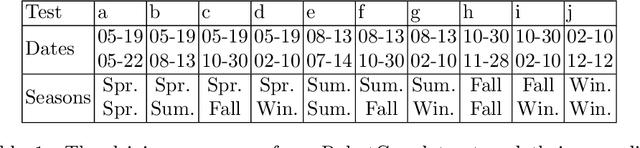

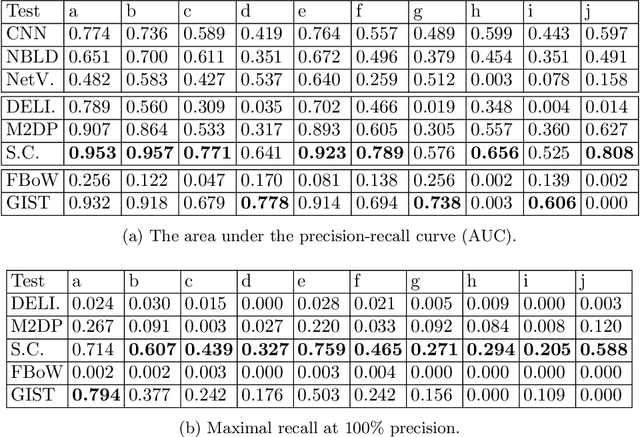

Place recognition is a core component of SLAM, and in most visual SLAM systems it is based on the similarity between 2D images. However, the 3D points generated by visual odometry, and the structural information embedded in them, are not exploited. In this paper, we adapt place recognition methods designed for 3D point clouds to stereo visual odometry. Stereo visual odometry generates 3D point clouds with a consistent scale, so we are able to use global LiDAR descriptors for 3D point clouds to determine the similarity between places. 3D point clouds are more robust than 2D visual cues (e.g., 2D features) to environmental changes such as varying illumination, and can benefit visual SLAM systems in long-term deployment scenarios. Extensive evaluation on a public dataset (Oxford RobotCar) demonstrates the accuracy and efficiency advantages of using 3D point clouds for place recognition over 2D methods.
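
To make the idea of comparing places through a global point-cloud descriptor concrete, here is a minimal sketch. It is not one of the LiDAR descriptors evaluated in the paper, which the abstract does not name. The descriptor is a deliberately simple stand-in: a normalized histogram of horizontal point distances from the sensor origin, compared with cosine similarity. The function names, the `max_range` and `num_rings` parameters, and the assumption that z is the vertical axis are all hypothetical and chosen only for illustration.

```python
import math


def ring_descriptor(points, max_range=50.0, num_rings=10):
    """Toy global descriptor (illustrative stand-in, not the paper's method).

    Builds a normalized histogram of horizontal distances from the origin.
    Assumes z is the vertical axis, so the result does not change when the
    cloud is rotated about z.
    """
    hist = [0.0] * num_rings
    for x, y, _z in points:
        r = math.hypot(x, y)
        if r >= max_range:
            continue  # ignore points beyond the assumed maximum range
        ring = min(int(r / max_range * num_rings), num_rings - 1)
        hist[ring] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist


def cosine_similarity(a, b):
    """Cosine similarity between two descriptors; 0.0 if either is all zeros."""
    dot = sum(p * q for p, q in zip(a, b))
    norm_a = math.sqrt(sum(p * p for p in a))
    norm_b = math.sqrt(sum(q * q for q in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(query, database):
    """Return (index, similarity) of the stored descriptor closest to the query."""
    scores = [cosine_similarity(query, d) for d in database]
    index = max(range(len(scores)), key=scores.__getitem__)
    return index, scores[index]
```

In use, each keyframe's local point cloud from stereo visual odometry would be passed through `ring_descriptor`, stored in a list, and queried with `best_match` when a new keyframe arrives. A comparison like this is only meaningful because the stereo point clouds have a consistent metric scale, as the abstract notes. A real system would use a more discriminative descriptor and a similarity threshold before accepting a match.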
