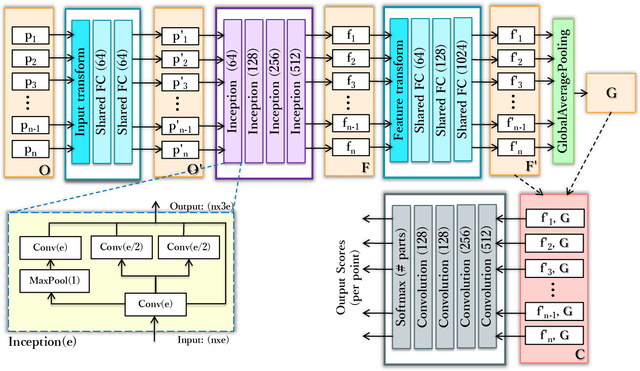

PIG-Net: Inception based Deep Learning Architecture for 3D Point Cloud Segmentation


Jan 28, 2021

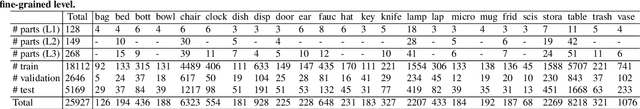

Point clouds, a simple and compact representation of the surface geometry of 3D objects, have gained increasing popularity with the evolution of deep learning networks for classification and segmentation tasks. Unlike for humans, teaching a machine to analyze the segments of an object is a challenging task, and it is essential in various machine vision applications. In this paper, we address the problem of segmentation and labelling of 3D point clouds by proposing an inception-based deep network architecture called PIG-Net, which effectively characterizes the local and global geometric details of the point clouds. In PIG-Net, the local features are extracted from the transformed input points using the proposed inception layers and then aligned by a feature transform. These local features are aggregated using a global average pooling layer to obtain the global features. Finally, the concatenated local and global features are fed to the convolution layers for segmenting the 3D point clouds. We perform an exhaustive experimental analysis of the PIG-Net architecture on two state-of-the-art datasets, namely ShapeNet [1] and PartNet [2]. We evaluate the effectiveness of our network by performing an ablation study.
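The aggregation step described in the abstract (global average pooling of per-point features, then concatenation of local and global features before the segmentation layers) can be sketched in a few lines. This is a minimal illustration in plain Python, not the authors' implementation: the function names, the toy feature values, and the single shared per-point linear layer standing in for the convolution layers are all assumptions, and the inception layers and the input/feature transforms are omitted.

```python
from typing import List

Matrix = List[List[float]]


def global_average_pool(local_features: Matrix) -> List[float]:
    """Average each feature channel over all points: (N, C) -> (C,)."""
    num_points = len(local_features)
    num_channels = len(local_features[0])
    return [
        sum(point[c] for point in local_features) / num_points
        for c in range(num_channels)
    ]


def concat_local_global(local_features: Matrix) -> Matrix:
    """Append the pooled global vector to every point: (N, C) -> (N, 2C)."""
    global_feature = global_average_pool(local_features)
    return [point + global_feature for point in local_features]


def pointwise_layer(features: Matrix, weights: Matrix, bias: List[float]) -> Matrix:
    """Shared per-point linear map (equivalent to a 1x1 convolution).

    `weights` holds one row of length C_in per output channel (hypothetical layout).
    """
    return [
        [sum(x * w for x, w in zip(point, row)) + b for row, b in zip(weights, bias)]
        for point in features
    ]


def predict_labels(scores: Matrix) -> List[int]:
    """Pick the highest-scoring part label for each point."""
    return [max(range(len(row)), key=row.__getitem__) for row in scores]


# Toy example: 3 points with 2 local feature channels each (made-up values).
local = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
fused = concat_local_global(local)

# Hypothetical weights for 2 part classes over the 4 fused channels.
weights = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
bias = [0.0, 0.0]
labels = predict_labels(pointwise_layer(fused, weights, bias))
```

Because the pooled vector is the same for every point, each per-point prediction sees both its own local features and a summary of the whole shape, which is the motivation the abstract gives for concatenating the two.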
