piCholesky: Polynomial Interpolation of Multiple Cholesky Factors for Efficient Approximate Cross-Validation


Jun 10, 2015

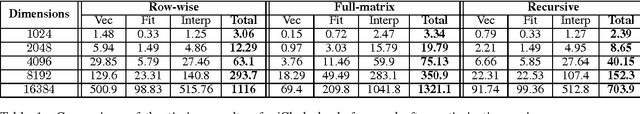

The dominant cost in solving least-squares problems using Newton's method is often that of factorizing the Hessian matrix over multiple values of the regularization parameter ($\lambda$). We propose an efficient way to interpolate the Cholesky factors of the Hessian matrix computed over a small set of $\lambda$ values. This approximation enables us to minimize the hold-out error while incurring only a fraction of the cost of exact cross-validation. We provide a formal error bound for our approximation scheme and present solutions to a set of key implementation challenges that allow our approach to fully exploit the compute power of modern architectures. We present a thorough empirical analysis over multiple datasets to show the effectiveness of our approach.
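The idea of interpolating Cholesky factors can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: it assumes a ridge-style Hessian of the form $A + \lambda I$ with $A = X^\top X$, fits one low-degree polynomial in $\lambda$ per lower-triangular entry by ordinary least squares, and uses hypothetical helper names (`fit_cholesky_interpolant`, `eval_cholesky_interpolant`), sample values, and polynomial degree chosen only for illustration.

```python
import numpy as np

def fit_cholesky_interpolant(A, sample_lams, degree=2):
    """Fit one polynomial in lam per lower-triangular entry of chol(A + lam*I).

    Hypothetical helper for illustration; assumes A is symmetric positive
    semidefinite and every lam in sample_lams is positive.
    """
    d = A.shape[0]
    rows, cols = np.tril_indices(d)
    # Each row of `targets` holds the lower-triangular entries of one exact factor.
    targets = np.stack([
        np.linalg.cholesky(A + lam * np.eye(d))[rows, cols]
        for lam in sample_lams
    ])
    # Vandermonde matrix in lam; one least-squares solve fits all entries at once.
    V = np.vander(np.asarray(sample_lams, dtype=float), degree + 1)
    coeffs, *_ = np.linalg.lstsq(V, targets, rcond=None)
    return coeffs, (rows, cols), d

def eval_cholesky_interpolant(model, lam):
    """Evaluate the fitted polynomials at a new lam to get an approximate factor."""
    coeffs, (rows, cols), d = model
    powers = np.vander(np.array([lam], dtype=float), coeffs.shape[0])
    L = np.zeros((d, d))
    L[rows, cols] = (powers @ coeffs).ravel()
    return L

# Small synthetic demo (data and lam values are assumptions, not from the paper).
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 5))
A = X.T @ X

sample_lams = [0.5, 1.0, 1.5, 2.0]   # the few lam values factorized exactly
model = fit_cholesky_interpolant(A, sample_lams, degree=2)

lam_new = 1.25                       # a lam value that was never factorized
L_approx = eval_cholesky_interpolant(model, lam_new)
L_exact = np.linalg.cholesky(A + lam_new * np.eye(A.shape[0]))
rel_err = np.linalg.norm(L_approx - L_exact) / np.linalg.norm(L_exact)
print(f"relative error of interpolated factor: {rel_err:.2e}")
```

In this sketch only four exact factorizations are computed; any further $\lambda$ on the grid costs a polynomial evaluation plus triangular solves rather than a fresh Cholesky factorization, which is where the savings over exact cross-validation would come from.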
