Perturbative estimation of stochastic gradients

Paper and Code

Apr 08, 2019

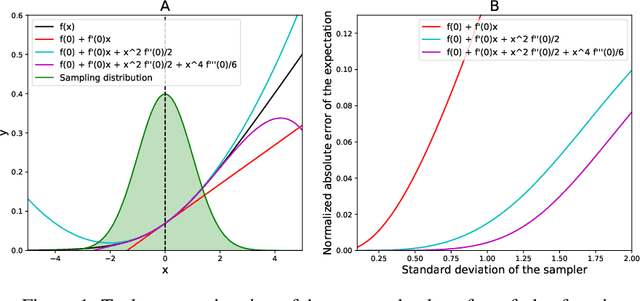

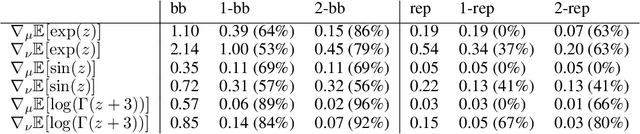

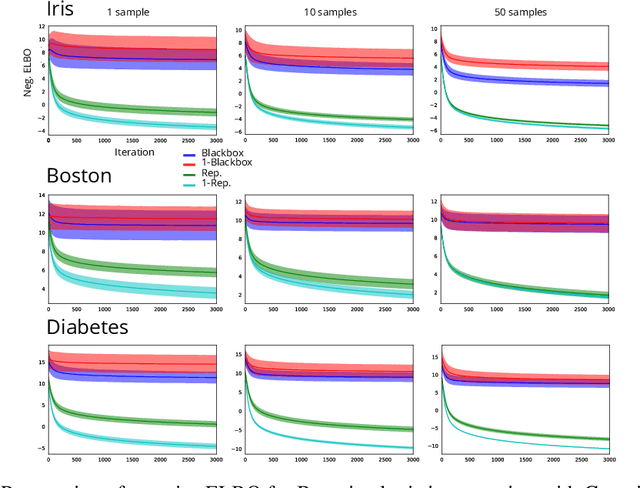

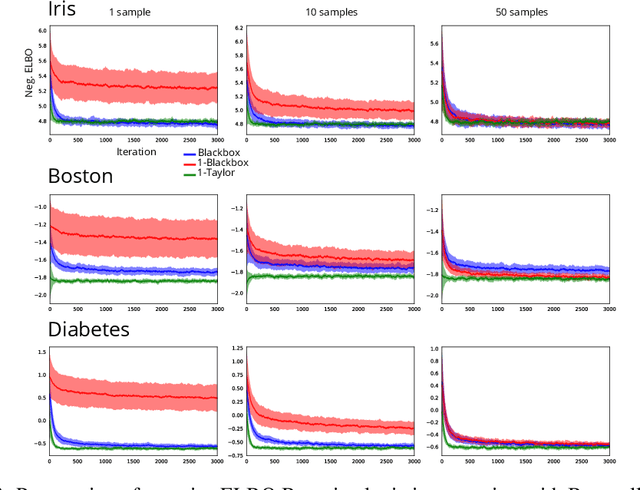

In this paper we introduce a family of stochastic gradient estimation techniques based of the perturbative expansion around the mean of the sampling distribution. We characterize the bias and variance of the resulting Taylor-corrected estimators using the Lagrange error formula. Furthermore, we introduce a family of variance reduction techniques that can be applied to other gradient estimators. Finally, we show that these new perturbative methods can be extended to discrete functions using analytic continuation. Using this technique, we derive a new gradient descent method for training stochastic networks with binary weights. In our experiments, we show that the perturbative correction improves the convergence of stochastic variational inference both in the continuous and in the discrete case.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge