Permuted AdaIN: Enhancing the Representation of Local Cues in Image Classifiers

Oct 09, 2020

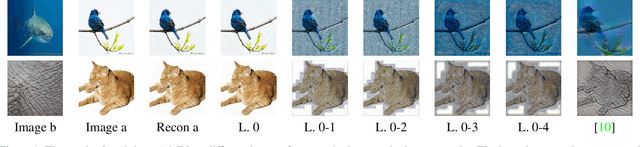

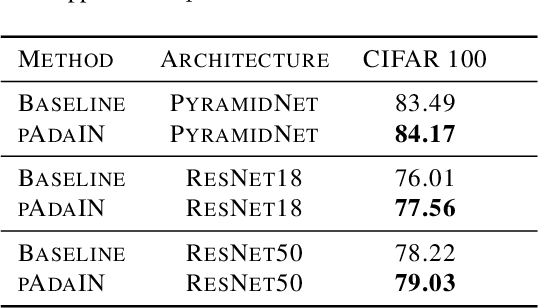

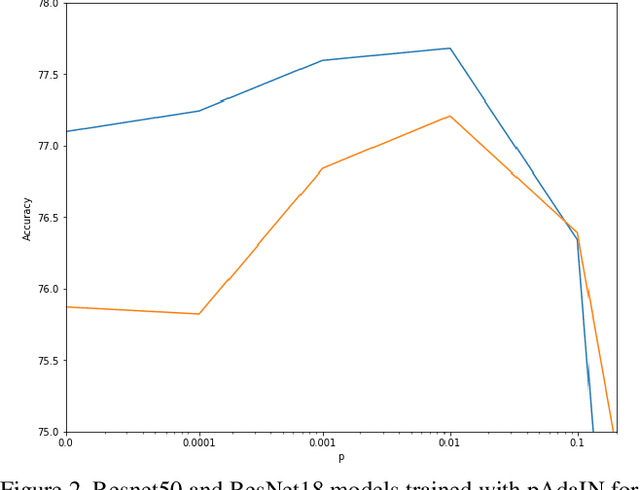

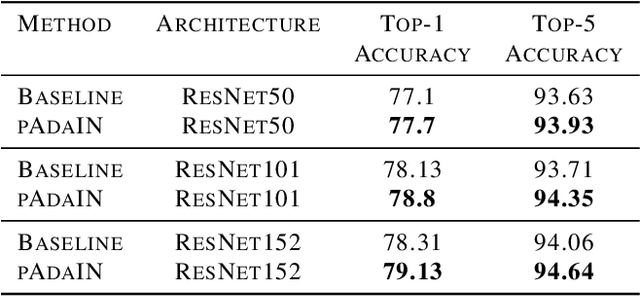

Recent work has shown that convolutional neural network classifiers rely too heavily on texture at the expense of shape cues, which hurts their performance in shifted domains. In this work, we draw a related but distinct separation: between local image cues, which include both shape and texture, and global image statistics. We provide a method that enhances the representation of local cues in the hidden layers of image classifiers. Our method, called Permuted Adaptive Instance Normalization (pAdaIN), samples a random permutation $\pi$ that rearranges the samples in a given batch. Adaptive Instance Normalization (AdaIN) is then applied between the activations of each (non-permuted) sample $i$ and the corresponding activations of the sample $\pi(i)$, thus swapping statistics between the samples of the batch. Because this swapping distorts the global image statistics, the network is pushed to rely on the local image cues. The random permutation is used with probability $p$ and the identity permutation otherwise, so $p$ controls the strength of this effect. With a suitable choice of $p$, selected without considering the test data, our method consistently outperforms baseline methods in image classification, as well as in the setting of domain generalization.
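The statistic swap described in the abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The function names `adain` and `padain`, the `(batch, channels, height, width)` layout, and the `eps` stabilizer are assumptions made for illustration. The sketch uses the standard AdaIN form, which normalizes each channel of $a$ by its own spatial mean and standard deviation and then rescales it with those of $b$.

```python
import numpy as np


def adain(a, b, eps=1e-5):
    """Standard AdaIN: give a the per-sample, per-channel mean/std of b.

    a, b: arrays of shape (batch, channels, height, width) (assumed layout).
    eps:  small constant for numerical stability (an assumption).
    """
    mu_a = a.mean(axis=(2, 3), keepdims=True)
    sd_a = a.std(axis=(2, 3), keepdims=True) + eps
    mu_b = b.mean(axis=(2, 3), keepdims=True)
    sd_b = b.std(axis=(2, 3), keepdims=True) + eps
    return sd_b * (a - mu_a) / sd_a + mu_b


def padain(x, p, rng):
    """With probability p, apply AdaIN between sample i and sample perm[i].

    Otherwise the identity permutation is used and x is returned unchanged.
    Returns the (possibly modified) activations and the permutation used.
    """
    batch_size = x.shape[0]
    if rng.random() >= p:
        return x, np.arange(batch_size)  # identity permutation
    perm = rng.permutation(batch_size)
    return adain(x, x[perm]), perm
```

In this sketch, `padain(x, p, np.random.default_rng(0))` would be called on a batch of hidden activations during training only. Which layers receive the operation and how $p$ is chosen are not specified in the abstract, so they are left to the caller.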
