Perception Over Time: Temporal Dynamics for Robust Image Understanding

Paper and Code

Mar 11, 2022

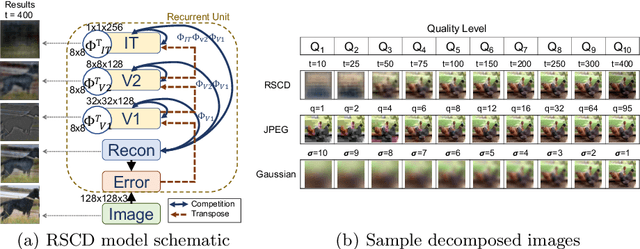

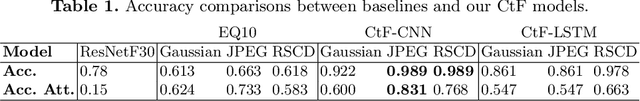

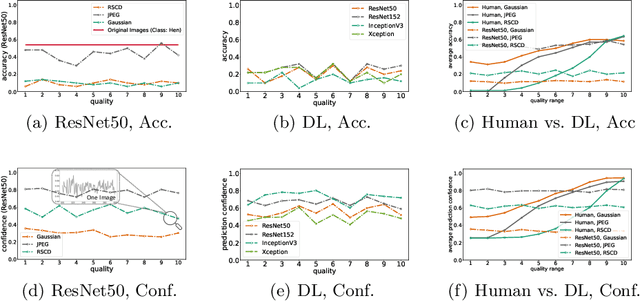

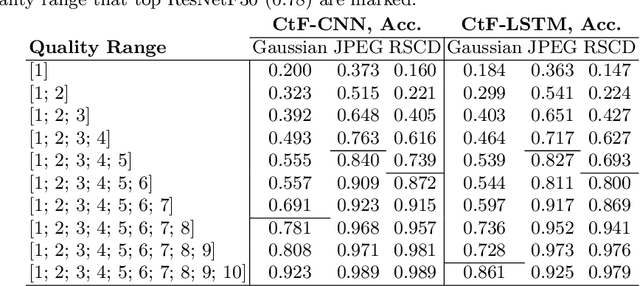

While deep learning surpasses human-level performance on narrow, specific vision tasks, it is fragile and over-confident in classification. For example, minor changes in perspective, illumination, or object deformation in image space can produce drastically different labels, a weakness that adversarial perturbations make especially apparent. Human visual perception, by contrast, is orders of magnitude more robust to changes in the input stimulus. Unfortunately, we are far from fully understanding and integrating the underlying mechanisms that produce such robust perception. In this work, we introduce a novel method of incorporating temporal dynamics into static image understanding. We describe a neuro-inspired method that decomposes a single image into a series of coarse-to-fine images, simulating how biological vision integrates information over time. Next, we demonstrate how our visual perception framework can use this information "over time" through a biologically plausible algorithm with recurrent units and, as a result, significantly improve its accuracy and robustness over standard CNNs. We also compare our proposed approach with state-of-the-art models and explicitly quantify its adversarial robustness properties through multiple ablation studies. Our quantitative and qualitative results demonstrate improvements over the standard computer vision and deep learning architectures used today.
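The coarse-to-fine decomposition described above can be illustrated with a minimal sketch. The abstract does not specify how the image series is produced, so this example assumes a simple stand-in: Gaussian blurs of decreasing strength applied to a grayscale image. The function names (`gaussian_blur`, `coarse_to_fine`) and the `sigmas` schedule are hypothetical and are not taken from the paper or its code.

```python
import numpy as np


def gaussian_kernel_1d(sigma):
    """Normalized 1-D Gaussian kernel truncated at about 3 standard deviations."""
    radius = max(1, int(3 * sigma + 0.5))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return kernel / kernel.sum()


def gaussian_blur(image, sigma):
    """Separable Gaussian blur of a 2-D array; sigma <= 0 returns an unblurred copy."""
    image = np.asarray(image, dtype=np.float64)
    if sigma <= 0:
        return image.copy()
    kernel = gaussian_kernel_1d(sigma)
    radius = len(kernel) // 2
    # Edge padding keeps the output the same size as the input.
    padded = np.pad(image, radius, mode="edge")
    blur_1d = lambda v: np.convolve(v, kernel, mode="valid")
    rows = np.apply_along_axis(blur_1d, 1, padded)  # blur along width
    return np.apply_along_axis(blur_1d, 0, rows)    # blur along height


def coarse_to_fine(image, sigmas=(4.0, 2.0, 1.0, 0.0)):
    """Assumed decomposition: one frame per sigma, ordered coarsest first.

    Returns an array of shape (T, H, W) that a recurrent model could
    consume one frame per time step.
    """
    return np.stack([gaussian_blur(image, s) for s in sigmas])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((32, 32))
    frames = coarse_to_fine(image)
    print(frames.shape)
```

Each frame adds finer detail than the previous one, and the final frame (with `sigma` of 0) is the original image. A recurrent model would then read the frames in order, so that early time steps see only coarse structure.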
