Path Development Network with Finite-dimensional Lie Group Representation

Paper and Code

Apr 02, 2022

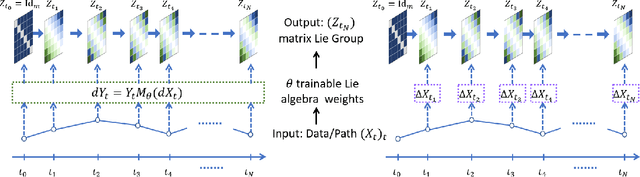

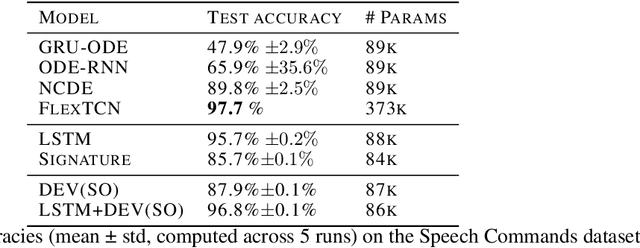

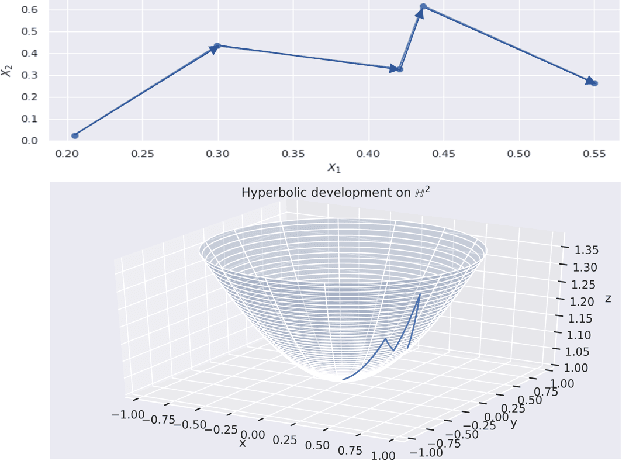

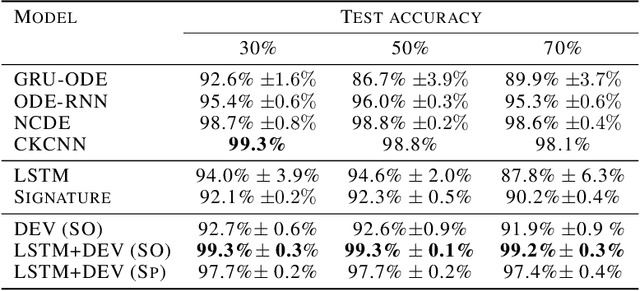

The path signature, a mathematically principled and universal feature of sequential data, boosts the performance of deep learning-based models on various sequential data tasks when used as a complementary feature. However, it suffers from the curse of dimensionality when the path dimension is high. To tackle this problem, we propose a novel, trainable path development layer, which represents sequential data through finite-dimensional matrix Lie groups. We also design the backpropagation algorithm of the development layer via trivialisation, an optimisation method on manifolds. Numerical experiments demonstrate that the path development consistently and significantly outperforms signature features, in both accuracy and dimensionality, on several empirical datasets. Moreover, stacking an LSTM with the development layer, given a suitable matrix Lie group, is shown empirically to alleviate the gradient issues of LSTMs, and the resulting hybrid model achieves state-of-the-art performance.
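
To make the idea of a path development concrete, here is a minimal forward-pass sketch in NumPy. It rests on several assumptions that do not come from the abstract: the Lie group is taken to be SO(n), the path is treated as piecewise linear, and the names `skew`, `expm_skew`, `development`, and `weights` are hypothetical. Each path increment is mapped linearly into the Lie algebra so(n), exponentiated, and the resulting group elements are multiplied in order. Training and the trivialisation-based backpropagation mentioned above are not covered.

```python
import numpy as np


def skew(a):
    """Map unconstrained square matrices into so(n) via A -> A - A^T."""
    return a - np.swapaxes(a, -1, -2)


def expm_skew(s):
    """Matrix exponential of a real skew-symmetric matrix.

    1j * s is Hermitian, so 1j * s = V diag(w) V^H with real w,
    hence exp(s) = V diag(exp(-1j * w)) V^H, which is real.
    """
    w, v = np.linalg.eigh(1j * s)
    return ((v * np.exp(-1j * w)) @ v.conj().T).real


def development(path, weights):
    """Development of a piecewise-linear path into SO(n) (illustrative only).

    path:    array of shape (T, d), a sequence of T points in R^d
    weights: array of shape (d, n, n), unconstrained parameters; their
             skew-symmetric parts act as the linear map R^d -> so(n)
    returns: array of shape (n, n), an element of SO(n)
    """
    gens = skew(weights)                   # (d, n, n), each slice in so(n)
    out = np.eye(gens.shape[-1])
    for dx in np.diff(path, axis=0):       # increments of the path
        # Linear map of the increment into the Lie algebra, then exponentiate.
        out = out @ expm_skew(np.tensordot(dx, gens, axes=1))
    return out


rng = np.random.default_rng(0)
path = rng.normal(size=(10, 3))            # 10 time steps in R^3
weights = rng.normal(size=(3, 4, 4))       # development into SO(4)
feature = development(path, weights)
print(feature.shape)
```

In this sketch the output is always an n × n matrix, so its size is set by the chosen group rather than by the path dimension d or the path length, which is the dimensionality advantage over signature features that the abstract refers to.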
