Parameter Database : Data-centric Synchronization for Scalable Machine Learning

Paper and Code

Aug 04, 2015

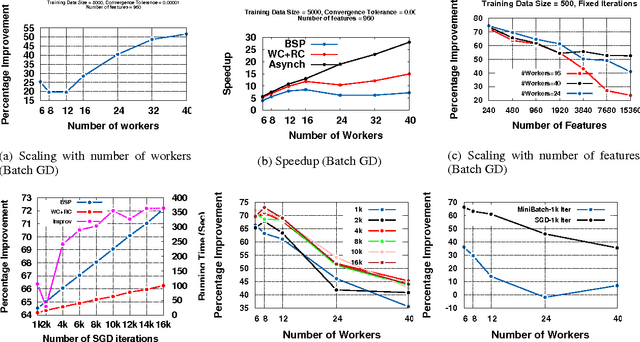

We propose a new data-centric synchronization framework for carrying out of machine learning (ML) tasks in a distributed environment. Our framework exploits the iterative nature of ML algorithms and relaxes the application agnostic bulk synchronization parallel (BSP) paradigm that has previously been used for distributed machine learning. Data-centric synchronization complements function-centric synchronization based on using stale updates to increase the throughput of distributed ML computations. Experiments to validate our framework suggest that we can attain substantial improvement over BSP while guaranteeing sequential correctness of ML tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge