Panopticus: Omnidirectional 3D Object Detection on Resource-constrained Edge Devices

Oct 02, 2024

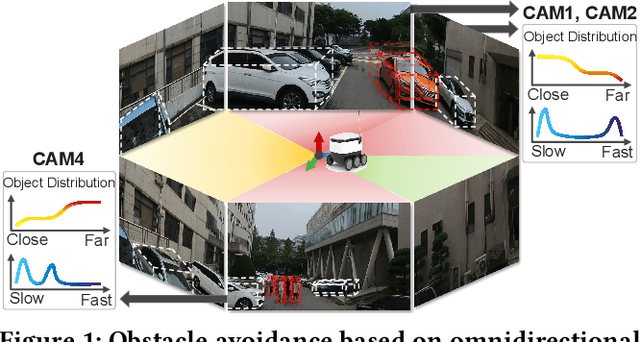

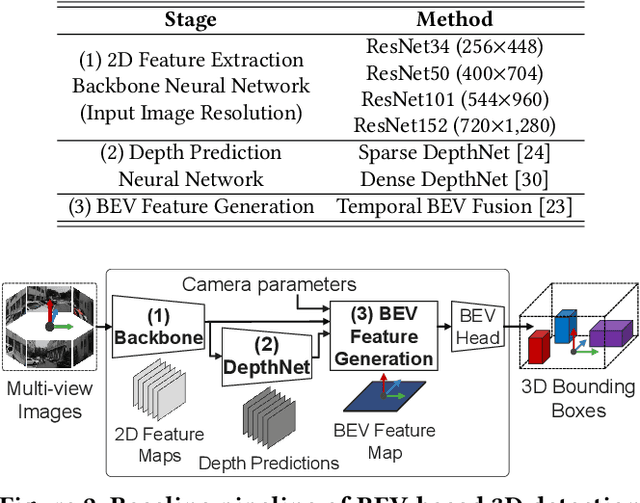

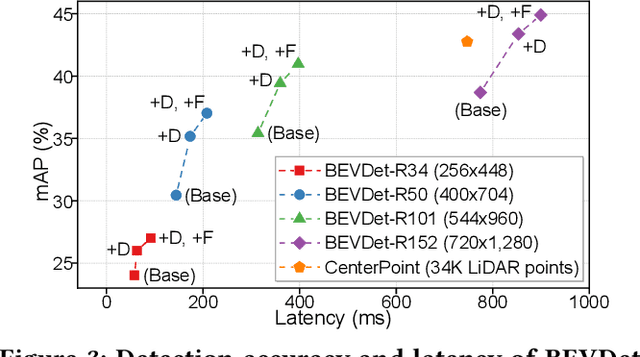

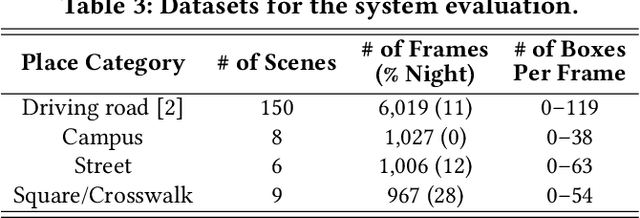

3D object detection with omnidirectional views enables safety-critical applications such as mobile robot navigation. Such applications increasingly run on resource-constrained edge devices, which allows reliable processing without privacy concerns or network delays. To enable cost-effective deployment, cameras have been widely adopted as a low-cost alternative to LiDAR sensors. However, the compute-intensive workload that camera-based solutions need to achieve high performance remains challenging given the computational limitations of edge devices. In this paper, we present Panopticus, a carefully designed system for omnidirectional, camera-based 3D detection on edge devices. Panopticus employs an adaptive multi-branch detection scheme that accounts for spatial complexities. To optimize accuracy within latency limits, Panopticus dynamically adjusts the model's architecture and operations based on available edge resources and spatial characteristics. We implemented Panopticus on three edge devices and conducted experiments across real-world environments using a public self-driving dataset and our mobile 360° camera dataset. Experimental results show that Panopticus improves accuracy by 62% on average under a strict latency objective of 33 ms. Panopticus also achieves a 2.1× latency reduction on average compared to baselines.
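To make the idea of trading accuracy against a latency limit concrete, here is a minimal sketch of a per-view branch selection under a latency budget. It is not the Panopticus scheduler: the abstract does not describe the actual algorithm, so the `Branch` type, the `select_branches` function, the exhaustive search, and all latency and score numbers are hypothetical and chosen only for illustration. The sketch assumes each camera view has a few candidate detection branches with a profiled latency and a predicted detection score, and picks one branch per view so that the total predicted score is maximal while the summed latency stays within the budget.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Branch:
    """Hypothetical candidate detection branch for one camera view."""
    name: str
    latency_ms: float  # assumed to come from offline profiling on the device
    score: float       # assumed predicted detection score for this view


def select_branches(views, budget_ms):
    """Pick one branch per view, maximizing total score within budget_ms.

    `views` is a list of lists of Branch candidates, one inner list per view.
    Returns (chosen_branches, total_latency_ms, total_score), or None when no
    combination fits the budget. Exhaustive search is used for clarity; it is
    only practical for a handful of views and branches.
    """
    best = None
    for combo in product(*views):
        latency = sum(b.latency_ms for b in combo)
        if latency > budget_ms:
            continue  # this combination violates the latency objective
        score = sum(b.score for b in combo)
        if best is None or score > best[2]:
            best = (combo, latency, score)
    return best


if __name__ == "__main__":
    # Illustrative numbers only: the heavy branch helps most on the
    # spatially complex view (the second one).
    views = [
        [Branch("light", 4.0, 0.50), Branch("heavy", 12.0, 0.52)],
        [Branch("light", 4.0, 0.20), Branch("heavy", 12.0, 0.50)],
        [Branch("light", 4.0, 0.40), Branch("heavy", 12.0, 0.45)],
    ]
    result = select_branches(views, budget_ms=20.0)
    if result is not None:
        chosen, latency, score = result
        print([b.name for b in chosen], latency, round(score, 2))
```

With these made-up numbers, the budget only allows one heavy branch, and the search spends it on the view where the predicted gain is largest. A real system would need a faster selection method and a way to estimate the per-view scores at runtime, neither of which is specified in the text above.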
