Overcoming Obstructions via Bandwidth-Limited Multi-Agent Spatial Handshaking


Jul 01, 2021

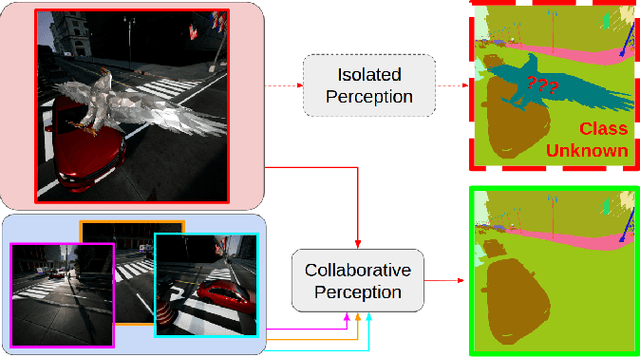

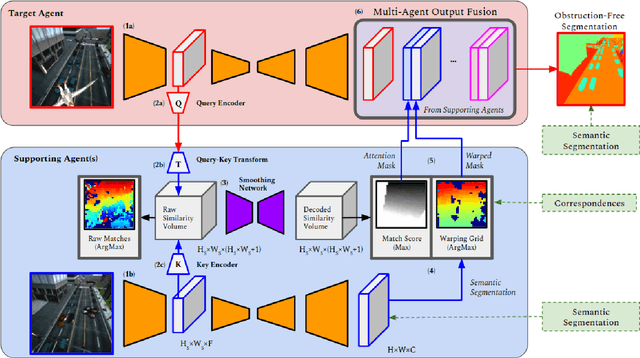

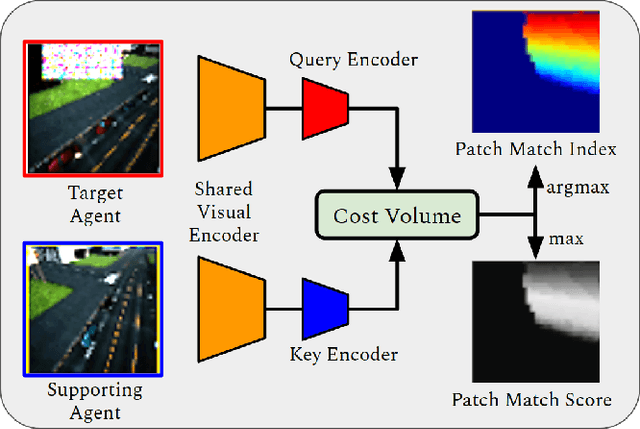

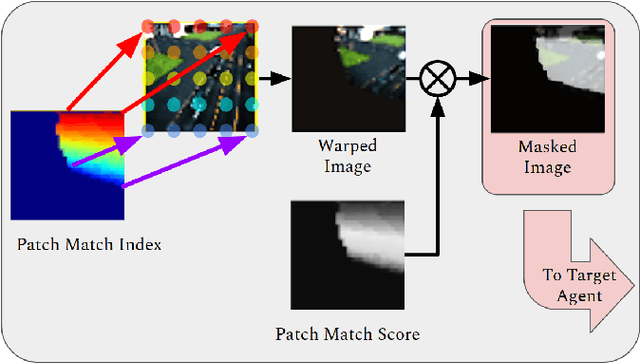

In this paper, we address bandwidth-limited and obstruction-prone collaborative perception, specifically in the context of multi-agent semantic segmentation. This setting presents several key challenges, including processing and exchanging unregistered robotic swarm imagery. To be successful, solutions must effectively leverage multiple non-static and intermittently overlapping RGB perspectives while heeding bandwidth constraints and overcoming unwanted foreground obstructions. As such, we propose an end-to-end learnable Multi-Agent Spatial Handshaking network (MASH) to process, compress, and propagate visual information across a robotic swarm. Our distributed communication module operates directly (and exclusively) on raw image data, without additional input requirements such as pose, depth, or warping data. We demonstrate that our model outperforms several baselines in a photo-realistic multi-robot AirSim environment, especially in the presence of image occlusions. Our method achieves an absolute 11% IoU improvement over strong baselines.
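
The reported gain is stated in absolute IoU terms, which can be read as a difference in percentage points rather than a ratio. As a rough illustration of that distinction, the sketch below computes mean intersection-over-union on toy label lists and compares an absolute gain with a relative one. The function name `mean_iou`, the toy labels, and the choice to average over classes are assumptions made for illustration only; this is not the paper's evaluation code, and the numbers are not results from the paper.

```python
def mean_iou(pred, target, num_classes):
    """Mean IoU over classes that appear in either pred or target.

    pred and target are flat lists of integer class labels, one per pixel.
    """
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:  # skip classes absent from both pred and target
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy, made-up data (hypothetical): six pixels, three classes.
target = [0, 0, 1, 1, 2, 2]
baseline = [0, 1, 1, 1, 2, 0]
improved = [0, 0, 1, 1, 2, 0]

iou_base = mean_iou(baseline, target, num_classes=3)
iou_new = mean_iou(improved, target, num_classes=3)

absolute_gain = iou_new - iou_base                 # difference in IoU points
relative_gain = (iou_new - iou_base) / iou_base    # ratio to the baseline

print(f"baseline mIoU: {iou_base:.3f}")
print(f"improved mIoU: {iou_new:.3f}")
print(f"absolute gain: {absolute_gain:.3f}")
print(f"relative gain: {relative_gain:.3f}")
```

On this toy input the absolute gain is about 0.22 while the relative gain is about 0.44, which shows why the two conventions should not be confused when comparing reported improvements.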
