Overcoming Catastrophic Forgetting by Generative Regularization


Dec 03, 2019

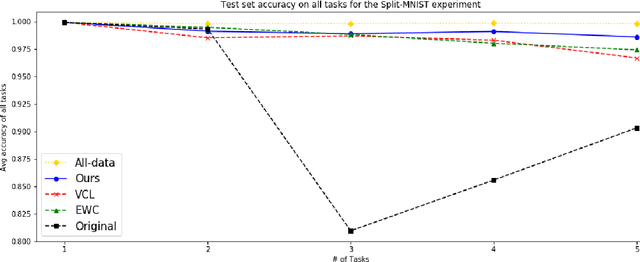

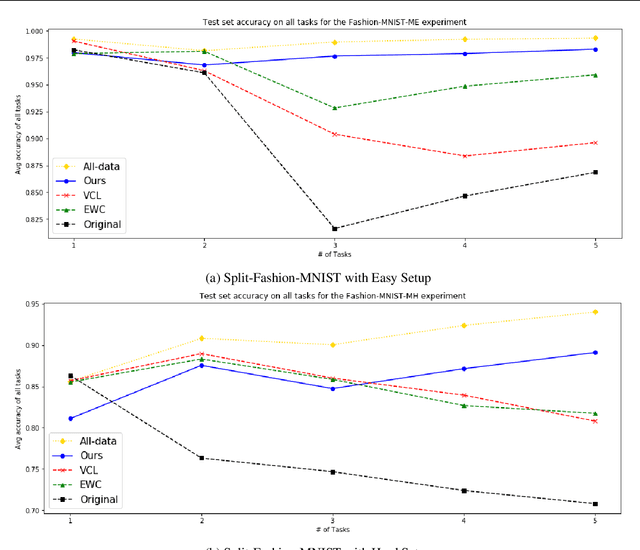

In this paper, we propose a new method to overcome catastrophic forgetting by adding generative regularization to the Bayesian inference framework. We construct a generative regularization term for any given model by leveraging energy-based models and Langevin dynamics sampling. By combining discriminative and generative losses, we show that this approach intuitively provides a better posterior formulation in Bayesian inference. Experimental results show that the proposed method outperforms state-of-the-art methods on a variety of tasks, avoiding catastrophic forgetting in continual learning. In particular, the proposed method outperforms previous methods by over 10% on the Fashion-MNIST dataset.
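The abstract does not specify the energy function, the sampler settings, or exactly how the generative term enters the continual-learning posterior. As a rough illustration of the general technique only, the sketch below assumes a JEM-style construction in which a classifier's logits f(x) define an energy E(x) = -log Σ_y exp(f(x)[y]). It uses a toy linear classifier in NumPy as a stand-in for a real network, draws negative samples with unadjusted Langevin dynamics, and approximates the generative loss with a contrastive-divergence-style difference between data and sample energies. All function names and hyperparameters (`n_steps`, `step_size`, `lam`) are hypothetical; this is not the paper's implementation.

```python
import numpy as np

def logits(x, W, b):
    # Toy linear classifier: x (n, d), W (d, k), b (k,) -> logits (n, k)
    return x @ W + b

def logsumexp(z, axis=-1):
    # Numerically stable log-sum-exp along `axis`
    m = z.max(axis=axis, keepdims=True)
    return (m + np.log(np.exp(z - m).sum(axis=axis, keepdims=True))).squeeze(axis)

def energy(x, W, b):
    # Assumed JEM-style energy: E(x) = -logsumexp_y f(x)[y], shape (n,)
    return -logsumexp(logits(x, W, b))

def energy_grad_x(x, W, b):
    # For a linear model, dE/dx = -softmax(f(x)) @ W^T
    z = logits(x, W, b)
    p = np.exp(z - z.max(axis=1, keepdims=True))
    p /= p.sum(axis=1, keepdims=True)
    return -p @ W.T

def langevin_sample(x0, W, b, n_steps=20, step_size=0.01, rng=None):
    # Unadjusted Langevin dynamics targeting p(x) proportional to exp(-E(x)):
    # x <- x - (step/2) * grad E(x) + sqrt(step) * N(0, I)
    rng = np.random.default_rng() if rng is None else rng
    x = x0.copy()
    for _ in range(n_steps):
        noise = rng.standard_normal(x.shape)
        x = x - 0.5 * step_size * energy_grad_x(x, W, b) + np.sqrt(step_size) * noise
    return x

def cross_entropy(x, y, W, b):
    # Discriminative term: mean of -log p(y | x)
    z = logits(x, W, b)
    return np.mean(logsumexp(z) - z[np.arange(len(y)), y])

def combined_loss(x, y, W, b, lam=1.0, rng=None):
    # Hypothetical combined objective: discriminative loss + lam * generative loss
    rng = np.random.default_rng(0) if rng is None else rng
    disc = cross_entropy(x, y, W, b)
    # Negative samples start from uniform noise and are refined by Langevin steps
    x_neg = langevin_sample(rng.uniform(-1.0, 1.0, size=x.shape), W, b, rng=rng)
    # Contrastive-divergence-style surrogate for -log p(x):
    # lower the energy of data, raise the energy of model samples
    gen = np.mean(energy(x, W, b)) - np.mean(energy(x_neg, W, b))
    return disc + lam * gen, disc, gen

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 4))
y = rng.integers(0, 3, size=8)
W = 0.1 * rng.standard_normal((4, 3))
b = np.zeros(3)
total, disc, gen = combined_loss(x, y, W, b, lam=0.5, rng=rng)
print(f"total={total:.4f} disc={disc:.4f} gen={gen:.4f}")
```

In a training loop, one would minimize `total` with respect to the model parameters; the weight `lam` trades off classification accuracy against how well the model's energy landscape fits the input distribution, which is the intuition behind combining the two losses.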
