Oriented Object Detection in Aerial Images Based on Area Ratio of Parallelogram

Paper and Code

Oct 14, 2021

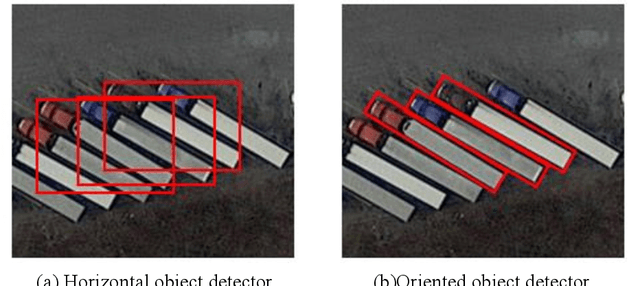

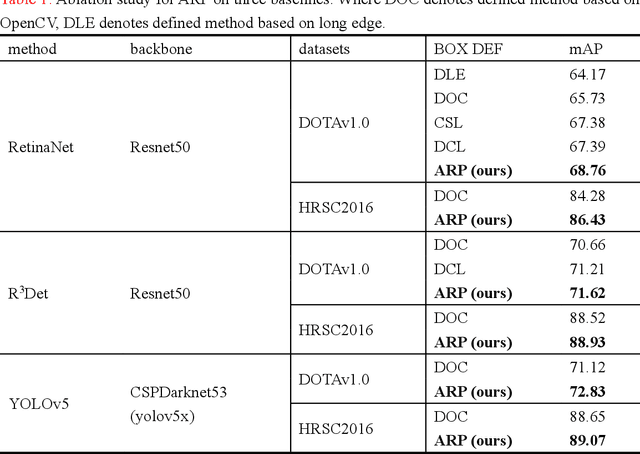

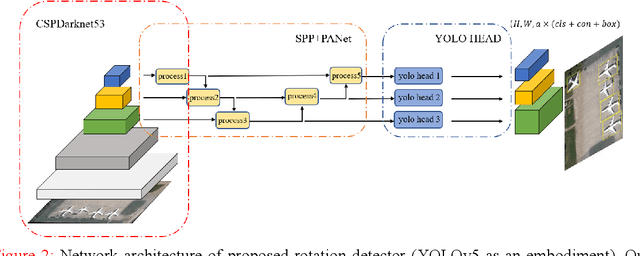

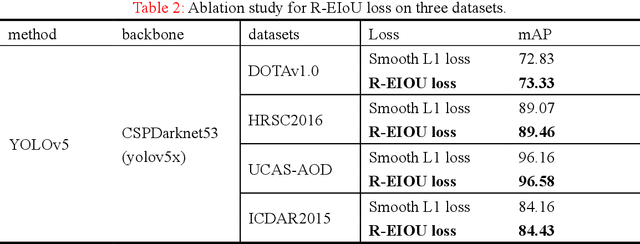

Rotated object detection is a challenging task in aerial images because objects appear in arbitrary orientations and are frequently densely packed. Although considerable progress has been made, existing regression-based rotation detectors still suffer from representation ambiguity. In this paper, we propose a simple, practical framework to optimize bounding box regression for rotated objects. Rather than directly regressing the five parameters (coordinates of the center point, width, height, and rotation angle) or the four vertices, we employ the area ratio of the parallelogram (ARP) to describe a multi-oriented object accurately. Specifically, ARP regresses the center point coordinates, height, and width of the oriented object's minimum circumscribed rectangle, together with three area ratios. This may facilitate offset learning and avoids the problems of angular periodicity and vertex label ordering for oriented objects. To further remedy the confusion that arises for nearly horizontal objects, the area ratio between the object and its minimum circumscribed rectangle is used to guide the choice between horizontal and oriented detection for each object. The rotation efficient IoU loss (R-EIOU) connects the flat bounding box with the three area ratios and improves the accuracy of the rotated bounding box. Experimental results on the remote sensing datasets HRSC2016, DOTA, and UCAS-AOD show that our method achieves detection performance superior to that of many state-of-the-art approaches. The code and model will be released when the paper is published.
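As an illustration of the area ratio between an object and its minimum circumscribed rectangle, the following is a minimal sketch and not the authors' implementation. It assumes the circumscribed rectangle is the axis-aligned ("flat") box around the object's vertices. The function names and the `threshold` value of 0.9 are hypothetical and do not come from the paper. The three ARP area ratios are not defined in the abstract and are not reproduced here.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(vertices: List[Point]) -> float:
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    n = len(vertices)
    for i in range(n):
        x1, y1 = vertices[i]
        x2, y2 = vertices[(i + 1) % n]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def enclosing_rectangle(vertices: List[Point]) -> Tuple[float, float, float, float]:
    """Axis-aligned enclosing rectangle as (center_x, center_y, width, height)."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    return ((x_min + x_max) / 2.0, (y_min + y_max) / 2.0,
            x_max - x_min, y_max - y_min)


def area_ratio(vertices: List[Point]) -> float:
    """Ratio of the object's area to the area of its enclosing rectangle."""
    _, _, width, height = enclosing_rectangle(vertices)
    rect_area = width * height
    if rect_area == 0.0:
        return 0.0  # degenerate object (a point or a line segment)
    return polygon_area(vertices) / rect_area


def prefer_horizontal(vertices: List[Point], threshold: float = 0.9) -> bool:
    """Hypothetical rule: treat the object as horizontal when it nearly fills
    its enclosing rectangle. The threshold is an assumed value."""
    return area_ratio(vertices) >= threshold


# An axis-aligned box fills its rectangle; a square rotated by 45 degrees fills half.
flat_box = [(0.0, 0.0), (4.0, 0.0), (4.0, 2.0), (0.0, 2.0)]
diamond = [(1.0, 0.0), (2.0, 1.0), (1.0, 2.0), (0.0, 1.0)]
```

Under these assumptions, `area_ratio(flat_box)` is 1.0 and `area_ratio(diamond)` is 0.5, so only the first would be routed to horizontal detection by this rule.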
