Optimizing Random Mixup with Gaussian Differential Privacy

Feb 14, 2022

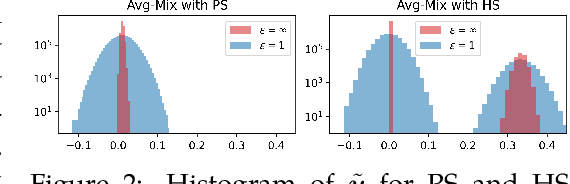

Differentially private data release is receiving growing attention in the machine learning community. Recently, an algorithm called DPMix was proposed to release high-dimensional data after a random mixup of degree $m$ with differential privacy. However, limited theoretical justification has been given for the "sweet spot $m$" phenomenon, and directly applying DPMix to image data incurs a severe loss of utility. In this paper, we revisit random mixup in light of recent progress on differential privacy. In theory, using Gaussian Differential Privacy with Poisson subsampling, we present a tight closed-form analysis that enables a quantitative characterization of the optimal mixup degree $m^*$ based on linear regression models. In practice, we mix up features, extracted either by handcrafted methods or by pre-trained neural networks (for example, networks trained with self-supervised learning without labels), to significantly boost performance under privacy protection. We name this method Differentially Private Feature Mixup (DPFMix). Experiments on MNIST and CIFAR10/100 demonstrate its remarkable utility improvement and its protection against attacks.
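To make the mechanism concrete, here is a minimal Python sketch of a noisy random mixup of degree $m$ over feature vectors. It is an illustration under stated assumptions, not the paper's implementation: the function names (`clip_rows`, `noisy_mixup`, `gdp_delta`), the row-wise clipping bound `clip`, the normalization by $m$, and the noise scale `sigma` are all hypothetical choices, since the abstract does not specify them. The helper `gdp_delta` uses the standard conversion from $\mu$-GDP to $(\varepsilon, \delta)$-DP; how $\mu$ depends on `sigma`, $m$, and the number of released samples is the subject of the paper's closed-form analysis and is not reproduced here.

```python
import math
import numpy as np

def clip_rows(x, c):
    """Scale each row so its L2 norm is at most c (bounds per-record sensitivity)."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x * np.minimum(1.0, c / np.maximum(norms, 1e-12))

def noisy_mixup(features, labels, m, num_out, sigma, clip=1.0, seed=0):
    """Release num_out noisy mixtures of features and (one-hot) labels.

    Assumptions of this sketch: each record joins a mixture independently
    with probability m / n (Poisson subsampling), the selected rows are
    summed and divided by m, and i.i.d. Gaussian noise with standard
    deviation sigma is added to every coordinate.
    """
    rng = np.random.default_rng(seed)
    n = features.shape[0]
    x = clip_rows(np.asarray(features, dtype=float), clip)
    y = clip_rows(np.asarray(labels, dtype=float), clip)
    # One Bernoulli(m / n) inclusion mask per released sample.
    mask = (rng.random((num_out, n)) < m / n).astype(float)
    mix_x = mask @ x / m + rng.normal(0.0, sigma, (num_out, x.shape[1]))
    mix_y = mask @ y / m + rng.normal(0.0, sigma, (num_out, y.shape[1]))
    return mix_x, mix_y

def gdp_delta(mu, eps):
    """delta(eps) such that a mu-GDP mechanism is (eps, delta)-DP."""
    phi = lambda t: 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))
    return phi(-eps / mu + mu / 2) - math.exp(eps) * phi(-eps / mu - mu / 2)
```

For example, `noisy_mixup(feats, onehot, m=64, num_out=1000, sigma=0.5)` would return 1000 noisy mixed feature vectors with matching soft labels, which could then be used to train a downstream classifier. In the feature-mixup setting described above, `feats` would be the output of a handcrafted or pre-trained extractor rather than raw pixels.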
