Optimization algorithms inspired by the geometry of dissipative systems


Dec 06, 2019

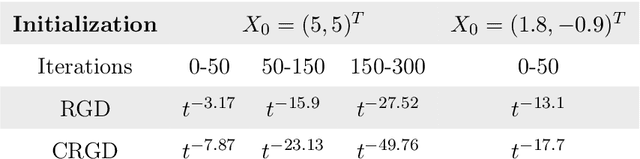

Accelerated gradient methods are a powerful optimization tool in machine learning and statistics, but their development has traditionally been driven by heuristic motivations. Recent research, however, has demonstrated that these methods can be derived as discretizations of dynamical systems. This has provided a basis for more systematic investigations, especially into the structure of those dynamical systems and their structure-preserving discretizations. In this work we introduce dynamical systems defined through a contact geometry. These systems are not only naturally suited to the optimization goal but also subsume all previous methods based on geometric dynamical systems. They also admit a natural, robust discretization through geometric contact integrators. We demonstrate these features in paradigmatic examples, which show that the resulting optimization algorithms achieve oracle lower bounds on convergence rates while also improving on previous proposals in terms of stability.
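To make the idea of "optimization as a discretized dissipative system" concrete, here is a minimal sketch. It is not the contact integrator from the paper. It assumes the simplest damped system, q' = p, p' = -∇f(q) - γp, with a constant damping γ, and uses a plain splitting scheme: the damping part is solved exactly over one step, followed by a gradient kick and a position drift. The names `damped_flow_minimize` and `grad_quadratic`, the test function, the step size, and the damping value are all illustrative assumptions.

```python
import math


def damped_flow_minimize(grad, q0, step=0.1, gamma=1.0, iters=500):
    """Discretize q' = p, p' = -grad f(q) - gamma * p with a simple splitting.

    Illustrative sketch only: constant damping and a hand-picked step size,
    not the geometric contact integrators described in the abstract.
    """
    q = list(q0)
    p = [0.0] * len(q)
    # Exact solution factor of the pure damping flow p' = -gamma * p over one step.
    decay = math.exp(-gamma * step)
    for _ in range(iters):
        g = grad(q)
        # Damping substep followed by a gradient "kick" on the momentum.
        p = [decay * pi - step * gi for pi, gi in zip(p, g)]
        # Drift: move the position along the updated momentum.
        q = [qi + step * pi for qi, pi in zip(q, p)]
    return q


def grad_quadratic(q):
    """Gradient of the assumed test function f(q) = 0.5 * (q[0]**2 + 10 * q[1]**2)."""
    return [q[0], 10.0 * q[1]]


# Start away from the minimizer at the origin and let the damped dynamics settle.
q_final = damped_flow_minimize(grad_quadratic, [1.0, 1.0])
print(q_final)
```

The update has the same shape as a momentum (heavy-ball style) method, which illustrates how a gradient method with momentum can arise from discretizing a dissipative flow. The step size and damping here are chosen by hand for this quadratic and carry no convergence-rate guarantee.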
