Optimised Convolutional Neural Networks for Heart Rate Estimation and Human Activity Recognition in Wrist Worn Sensing Applications

Mar 30, 2020

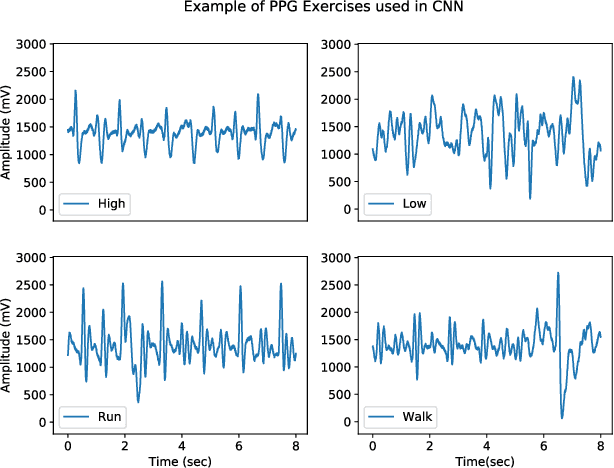

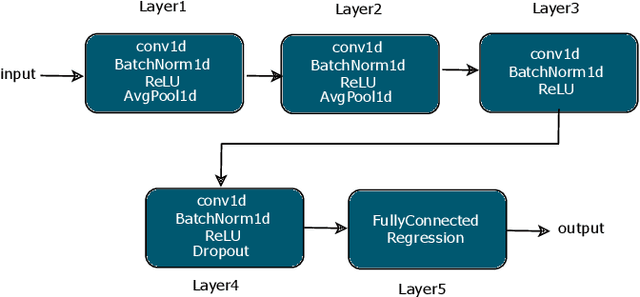

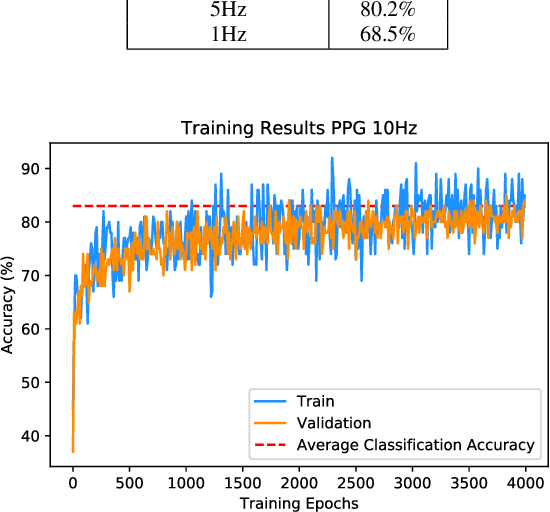

Wrist-worn smart devices are providing increased insights into human health, behaviour and performance through sophisticated analytics. However, battery life, device cost and sensor performance in the face of movement-related artefacts present challenges which must be addressed further before the technology can see effective applications and wider adoption through commoditisation. We address these challenges by demonstrating that a simple optical measurement, photoplethysmography (PPG), used conventionally for heart rate detection in wrist-worn sensors, can provide improved heart rate estimation and human activity recognition (HAR) simultaneously at low sample rates, without an inertial measurement unit. This simplifies hardware design and reduces costs and power budgets. We apply two deep learning pipelines, one for human activity recognition and one for heart rate estimation. HAR is achieved through the application of a visual classification approach, capable of robust performance at low sample rates. Here, transfer learning is leveraged to retrain a convolutional neural network (CNN) to distinguish characteristics of the PPG during different human activities. For heart rate estimation we use a CNN adapted for regression, which maps noisy optical signals to heart rate estimates. In both cases, comparisons are made with leading conventional approaches. Our results demonstrate that a low sampling frequency can achieve good performance without significant degradation of accuracy. Sampling at 5 Hz and 10 Hz gave HAR classification accuracies of 80.2% and 83.0%, respectively. These same sampling frequencies also yielded robust heart rate estimation, comparable with that achieved at the more energy-intensive rate of 256 Hz.
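
To give some intuition for why 5 Hz and 10 Hz can still carry heart rate information, the sketch below downsamples a synthetic 256 Hz pulse signal and recovers its rate with a plain spectral peak search. This is not the authors' CNN pipeline: the clean sinusoid standing in for PPG, the block-average downsampler, the 40 to 140 bpm search range, and the function names `synth_ppg`, `downsample` and `estimate_bpm` are all assumptions made for illustration. The only fact relied on is the Nyquist limit: a 5 Hz sample rate can represent frequencies up to 2.5 Hz, which corresponds to 150 beats per minute.

```python
import math


def synth_ppg(bpm, fs, seconds):
    """Hypothetical stand-in for a PPG trace: a clean sinusoid at the pulse rate."""
    f = bpm / 60.0  # pulse frequency in Hz
    return [math.sin(2 * math.pi * f * i / fs) for i in range(int(fs * seconds))]


def downsample(signal, fs_in, fs_out):
    """Reduce the sample rate by averaging consecutive blocks of input samples.

    Block averaging acts as a crude low-pass filter before the rate reduction.
    fs_in and fs_out are integer rates in Hz (an assumption of this sketch).
    """
    n_out = len(signal) * fs_out // fs_in
    out = []
    for k in range(n_out):
        start = k * fs_in // fs_out
        end = (k + 1) * fs_in // fs_out
        block = signal[start:end]
        out.append(sum(block) / len(block))
    return out


def estimate_bpm(signal, fs, lo=40, hi=140):
    """Return the candidate rate (in whole bpm) with the largest spectral power."""
    mean = sum(signal) / len(signal)
    centred = [s - mean for s in signal]
    best_bpm, best_power = lo, -1.0
    for bpm in range(lo, hi + 1):
        w = 2 * math.pi * (bpm / 60.0) / fs  # radians per sample
        re = sum(s * math.cos(w * i) for i, s in enumerate(centred))
        im = sum(s * math.sin(w * i) for i, s in enumerate(centred))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm


if __name__ == "__main__":
    ppg = synth_ppg(bpm=72, fs=256, seconds=30)
    for fs_low in (5, 10):
        low_rate = downsample(ppg, 256, fs_low)
        print(fs_low, "Hz ->", estimate_bpm(low_rate, fs_low), "bpm")
```

Real wrist PPG contains motion artefacts that a clean sinusoid does not, which is the part of the problem the paper hands to a learned model; the sketch only shows that the pulse frequency itself survives the reduction in sample rate.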
