Optimal Transport Based Generative Autoencoders

Oct 16, 2019

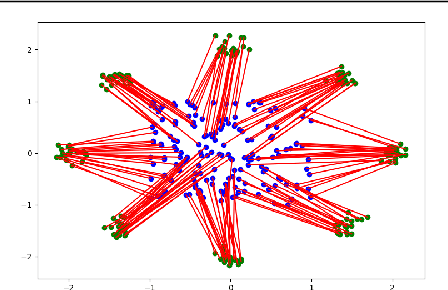

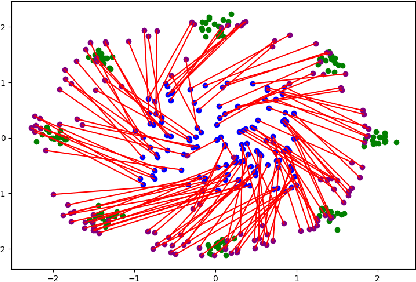

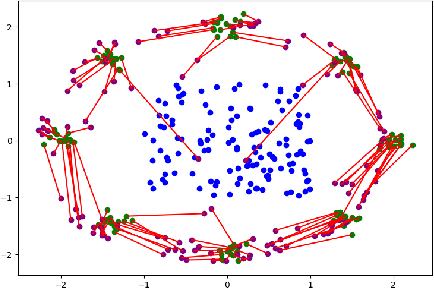

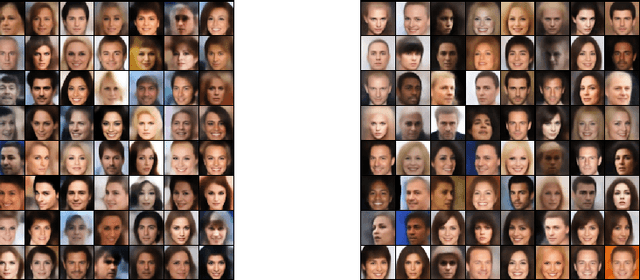

The field of deep generative modeling is dominated by generative adversarial networks (GANs). However, GAN training is often unstable, can fail to converge, and suffers from mode collapse. Solving these problems takes an assortment of tricks, which may be difficult to understand for those seeking to apply generative modeling. Instead, we propose two novel generative autoencoders, AE-OTtrans and AE-OTgen, which rely on optimal transport instead of adversarial training. Unlike VAE and WAE, AE-OTtrans and AE-OTgen preserve the manifold of the data; they do not force the latent distribution to match a normal distribution, which results in higher-quality images. AE-OTtrans and AE-OTgen also produce images of higher diversity than their predecessor, AE-OT. We show that AE-OTtrans and AE-OTgen surpass GANs on the MNIST and FashionMNIST datasets. Furthermore, we show that AE-OTtrans and AE-OTgen achieve state-of-the-art results on the MNIST, FashionMNIST, and CelebA image sets compared to other non-adversarial generative models.
