Optimal Dispatch in Emergency Service System via Reinforcement Learning

Paper and Code

Oct 15, 2020

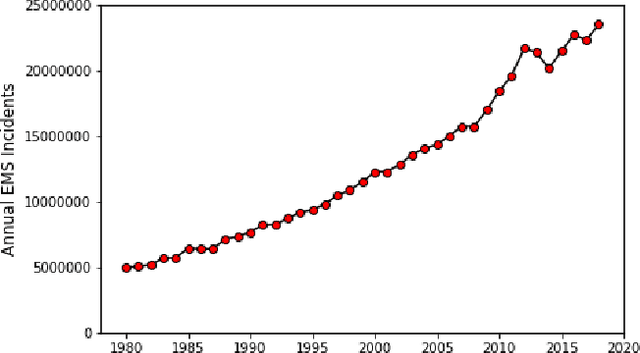

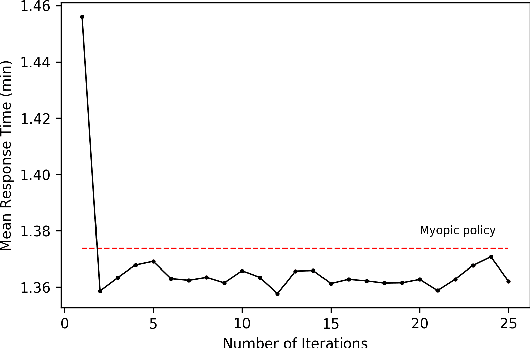

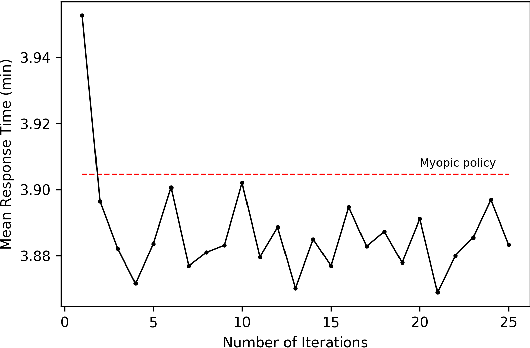

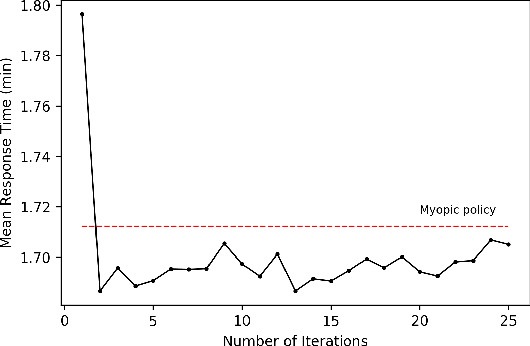

In the United States, medical responses by fire departments over the last four decades increased by 367%. This had made it critical to decision makers in emergency response departments that existing resources are efficiently used. In this paper, we model the ambulance dispatch problem as an average-cost Markov decision process and present a policy iteration approach to find an optimal dispatch policy. We then propose an alternative formulation using post-decision states that is shown to be mathematically equivalent to the original model, but with a much smaller state space. We present a temporal difference learning approach to the dispatch problem based on the post-decision states. In our numerical experiments, we show that our obtained temporal-difference policy outperforms the benchmark myopic policy. Our findings suggest that emergency response departments can improve their performance with minimal to no cost.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge