Optimal Continuous State POMDP Planning with Semantic Observations: A Variational Approach

Paper and Code

Jul 22, 2018

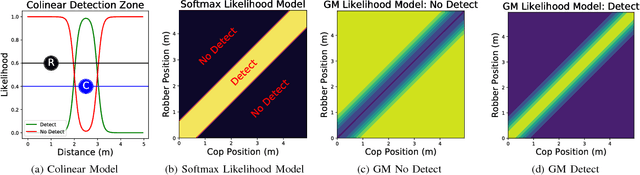

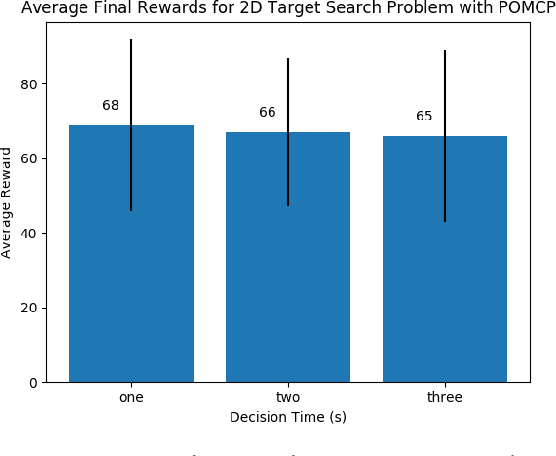

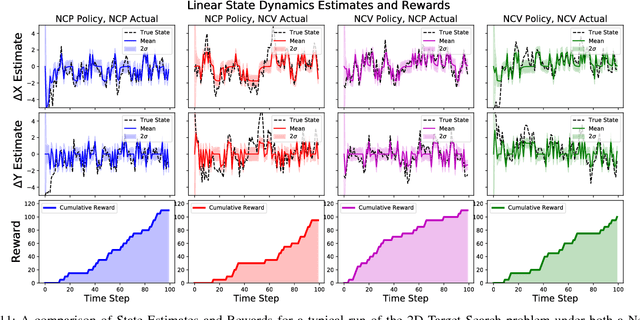

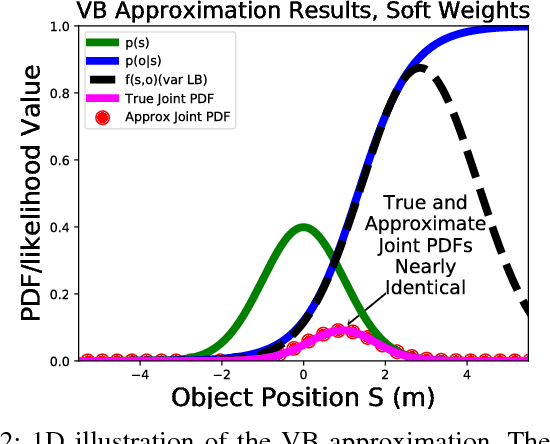

This work develops novel strategies for optimal planning with semantic observations using continuous-state Partially Observable Markov Decision Processes (CPOMDPs). Two major innovations are presented in relation to Gaussian mixture (GM) CPOMDP policy approximation methods. While existing methods have many attractive theoretical properties, they are hampered by their inability to efficiently represent and reason over hybrid continuous-discrete probabilistic models. The first major innovation is the derivation of closed-form variational Bayes GM approximations of Point-Based Value Iteration Bellman policy backups, using softmax models of continuous-discrete semantic observation probabilities. A key benefit of this approach is that dynamic decision-making tasks can be performed with complex non-Gaussian uncertainties while also exploiting continuous dynamic state space models, thus avoiding cumbersome and costly discretization. The second major innovation is a new clustering-based technique for mixture condensation that scales well to very large GM policy functions and belief functions. Simulation results for a target search and interception task with semantic observations show that the GM policies resulting from these innovations are more effective than those produced by other state-of-the-art GM and Monte Carlo based policy approximations, while requiring significantly less modeling overhead and runtime cost. Additional results demonstrate the robustness of this approach to model errors.
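To make two of the building blocks named in the abstract more concrete, here is a minimal sketch of a softmax semantic observation likelihood and of a moment-matched merge of Gaussian mixture components, the basic operation that mixture condensation relies on. It is not the paper's implementation: the function names, the weight matrix `W`, the bias vector `b`, and the example values are all hypothetical, and the merge shown is the generic moment-preserving one rather than the clustering-based procedure the authors describe.

```python
import numpy as np


def softmax_likelihood(x, W, b):
    """Return p(o = j | x) for every semantic class j.

    W (J x D) and b (J,) are hypothetical softmax parameters; each row
    of W scores one semantic label (e.g. "target is left of the robot").
    """
    logits = W @ x + b
    logits = logits - logits.max()  # subtract the max for numerical stability
    expd = np.exp(logits)
    return expd / expd.sum()


def merge_components(weights, means, covs):
    """Moment-matched merge of several Gaussian components into one.

    weights: (K,), means: (K, D), covs: (K, D, D).
    The merged Gaussian keeps the total weight, the mean, and the
    covariance of the sub-mixture it replaces.
    """
    total = weights.sum()
    mean = (weights[:, None] * means).sum(axis=0) / total
    diffs = means - mean
    # Spread-of-means term: outer product of each mean offset with itself.
    spread = diffs[:, :, None] * diffs[:, None, :]
    cov = (weights[:, None, None] * (covs + spread)).sum(axis=0) / total
    return total, mean, cov


if __name__ == "__main__":
    # Two hypothetical semantic classes split along the first state axis.
    W = np.array([[1.0, 0.0], [-1.0, 0.0]])
    b = np.zeros(2)
    print(softmax_likelihood(np.array([2.0, 0.0]), W, b))

    # Merge two equally weighted unit-covariance components.
    weights = np.array([0.5, 0.5])
    means = np.array([[-1.0, 0.0], [1.0, 0.0]])
    covs = np.stack([np.eye(2), np.eye(2)])
    print(merge_components(weights, means, covs))
```

In a condensation scheme of the kind the abstract describes, a merge like this would be applied within groups of similar components so that a large mixture shrinks to a manageable size; how the groups are formed is the part specific to the paper and is not reproduced here.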
