Optimal Algorithms for Differentially Private Stochastic Monotone Variational Inequalities and Saddle-Point Problems


Apr 07, 2021

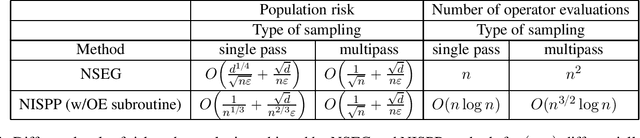

In this work, we conduct the first systematic study of stochastic variational inequality (SVI) and stochastic saddle point (SSP) problems under the constraint of differential privacy (DP). We propose two algorithms: Noisy Stochastic Extragradient (NSEG) and Noisy Inexact Stochastic Proximal Point (NISPP). We show that sampling-with-replacement variants of these algorithms attain the optimal risk for DP-SVI and DP-SSP. Key to our analysis is the investigation of algorithmic stability bounds for the two algorithms, both of which are new even in the nonprivate case, together with a novel "stability implies generalization" result for the gap functions of SVI and SSP problems. In terms of the dataset size $n$, the running time is $n^2$ for NSEG and $\widetilde{O}(n^{3/2})$ for NISPP.
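To make the idea of a noisy extragradient iteration concrete, here is a minimal sketch in plain Python. It is not the paper's NSEG specification: the function names (`project`, `noisy_extragradient`, `bilinear_operator`), the ball constraint, the fixed step size, the averaging rule, and the noise scale `sigma` are all assumptions chosen for illustration. In particular, `sigma` is left as a free parameter and is not calibrated to any privacy budget $(\varepsilon, \delta)$.

```python
import random


def project(v, radius):
    """Euclidean projection of v onto the ball of the given radius."""
    norm = sum(c * c for c in v) ** 0.5
    if norm <= radius:
        return list(v)
    return [c * radius / norm for c in v]


def noisy_extragradient(operator, data, z0, step, sigma, radius, steps, seed=0):
    """Extragradient iteration with Gaussian noise added to each operator call.

    operator(z, sample) returns the monotone operator evaluated at z on one
    data point. Samples are drawn with replacement. All parameter choices
    here are illustrative assumptions, not values taken from the paper.
    """
    rng = random.Random(seed)
    z = list(z0)
    avg = [0.0] * len(z)
    for _ in range(steps):
        # Extrapolation step: noisy operator evaluated at the current point.
        g1 = [g + rng.gauss(0.0, sigma) for g in operator(z, rng.choice(data))]
        w = project([zi - step * gi for zi, gi in zip(z, g1)], radius)
        # Update step: noisy operator evaluated at the extrapolated point.
        g2 = [g + rng.gauss(0.0, sigma) for g in operator(w, rng.choice(data))]
        z = project([zi - step * gi for zi, gi in zip(z, g2)], radius)
        # Running average of the extrapolated points.
        avg = [a + wi / steps for a, wi in zip(avg, w)]
    return avg


def bilinear_operator(z, s):
    """Operator (df/dx, -df/dy) of the toy saddle function f(x, y; s) = s*x*y."""
    x, y = z
    return [s * y, -s * x]


if __name__ == "__main__":
    out = noisy_extragradient(bilinear_operator, [1.0], [1.0, 1.0],
                              step=0.1, sigma=0.1, radius=2.0, steps=2000)
    print(out)
```

The toy problem $\min_x \max_y \, s\,xy$ has its saddle point at the origin, so the averaged iterate should drift toward $(0, 0)$ when `sigma` is small. A private version would additionally need the noise scale derived from the operator's sensitivity and the target privacy parameters, which this sketch does not attempt.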
