OpEvo: An Evolutionary Method for Tensor Operator Optimization


Jun 10, 2020

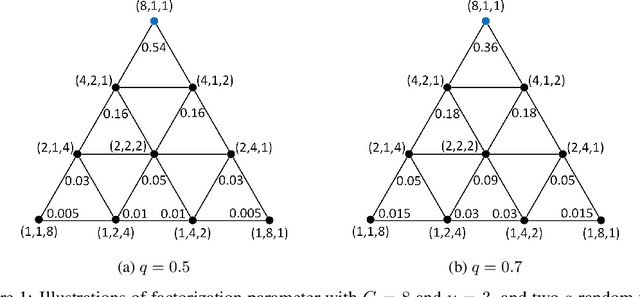

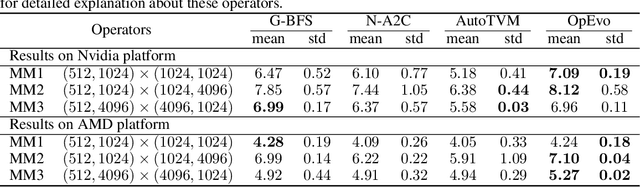

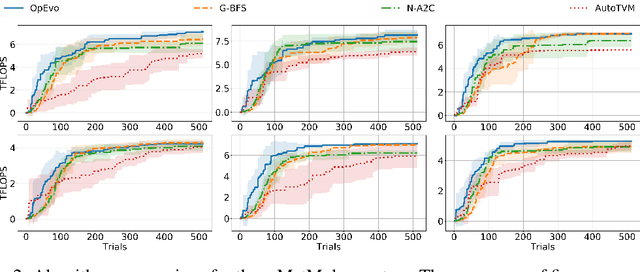

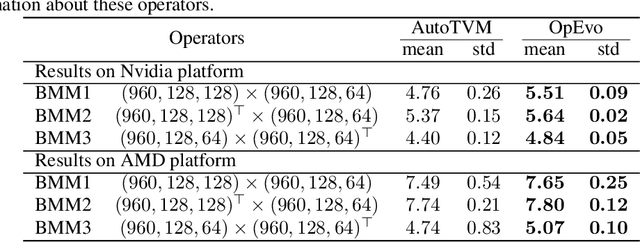

The training and inference efficiency of deep neural networks depends heavily on the performance of tensor operators on hardware platforms. Manually optimized tensor operators are limited in their ability to support new operators and new hardware platforms, so automatically optimizing the device code configurations of tensor operators is becoming increasingly attractive. However, current methods for tensor operator optimization usually suffer from poor sample efficiency because the search space is combinatorial. In this work, we propose a novel evolutionary method, OpEvo, which efficiently explores the search spaces of tensor operators by introducing a topology-aware mutation operation based on the q-random walk distribution, which leverages the topological structure of the search space. Our comprehensive experimental results show that OpEvo finds the best configuration with the fewest trials and the lowest variance compared with state-of-the-art methods. All code for this work is available online.
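To make the idea of a topology-aware mutation more concrete, the following is a minimal sketch under stated assumptions, not the authors' implementation. It assumes that a q-random walk starts at the current configuration and, at each step, moves to a uniformly chosen neighbor with probability `q` or stops otherwise, so that mutated configurations tend to stay close to their parent in the search-space graph. The neighbor relation shown (moving a factor of 2 between two tile sizes whose product is fixed) and the names `q_random_walk` and `move_factor_neighbors` are hypothetical and chosen only for illustration.

```python
import random


def q_random_walk(start, neighbors, q, rng):
    """Sample a vertex by walking from `start` (assumed definition).

    At each step the walk continues to a uniformly chosen neighbor with
    probability q and stops with probability 1 - q. The vertex where the
    walk stops is returned as the mutated configuration.
    """
    current = start
    while rng.random() < q:
        options = neighbors(current)
        if not options:  # isolated vertex: nothing to move to
            break
        current = rng.choice(options)
    return current


def move_factor_neighbors(config):
    """Hypothetical topology for tiling-style parameters.

    `config` is a tuple of positive integers whose product is fixed.
    Two configurations are neighbors if one is obtained from the other
    by moving a single factor of 2 from one position to another.
    """
    out = []
    for i, value in enumerate(config):
        if value % 2 != 0:
            continue  # no factor of 2 to move out of this position
        for j in range(len(config)):
            if i != j:
                nxt = list(config)
                nxt[i] //= 2
                nxt[j] *= 2
                out.append(tuple(nxt))
    return out


if __name__ == "__main__":
    rng = random.Random(0)
    parent = (8, 8)  # e.g. two tile sizes whose product must stay 64
    children = [q_random_walk(parent, move_factor_neighbors, 0.5, rng)
                for _ in range(5)]
    print(children)
```

With a small `q` most mutations stay at or near the parent, while a larger `q` lets the walk travel further, which is one way to trade off exploitation against exploration in such a scheme.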
