Online Tool Selection with Learned Grasp Prediction Models


Feb 15, 2023

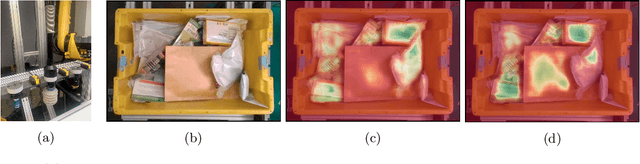

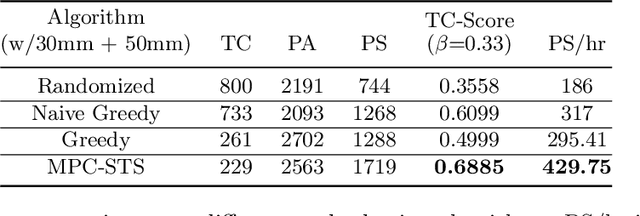

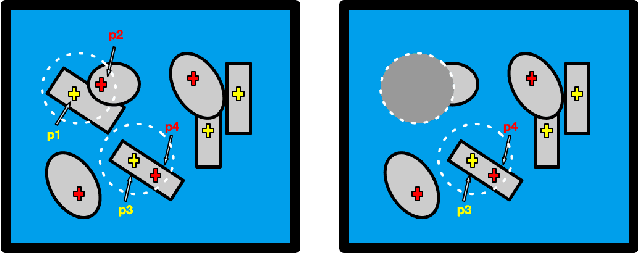

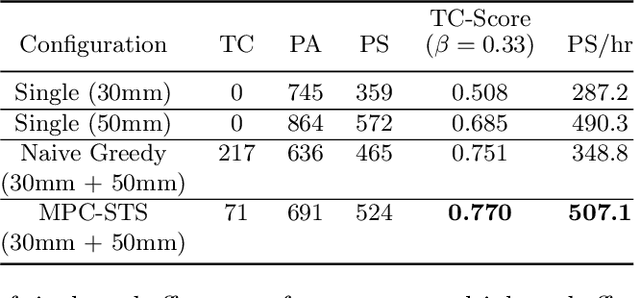

Deep learning-based grasp prediction models have become an industry standard for robotic bin-picking systems. To maximize pick success, production environments are often equipped with several end-effector tools that can be swapped on the fly, depending on the target object. Tool changes, however, take time. Choosing the order of grasps to perform, and the corresponding tool-change actions, can improve system throughput; this is the topic of our work. The main challenge in planning tool changes is uncertainty: we typically cannot see objects in the bin that are currently occluded. Inspired by queuing and admission control problems, we model the problem as a Markov Decision Process (MDP), where the goal is to maximize expected throughput, and we pursue an approximate solution based on model predictive control, where at each time step we plan based only on the currently visible objects. Special to our method is the idea of void zones, which are geometrical boundaries in which an unknown object will be present, and which therefore cannot be accounted for during planning. Our planning problem can be solved using integer linear programming (ILP). However, we find that an approximate solution based on sparse tree search yields near-optimal performance in a fraction of the time. Another question that we explore is how to measure the performance of tool-change planning: we find that throughput alone can fail to capture delicate and smooth behavior, and propose a principled alternative. Finally, we demonstrate our algorithms on both synthetic and real-world bin-picking tasks.
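
To make the planning idea concrete, here is a minimal toy sketch in Python. It is not the paper's ILP formulation or its sparse tree search. It is an exhaustive, depth-limited search over the currently visible grasps, used here as a simple stand-in. The `Grasp` fields, the reward (predicted success probability minus a fixed `change_cost` per tool swap), and the circular `void_radius` rule that drops grasps near an executed pick are all illustrative assumptions.

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Grasp:
    # Hypothetical grasp candidate: bin position, required tool, and the
    # success probability predicted by a grasp model.
    x: float
    y: float
    tool: str
    p_success: float


def plan(grasps, current_tool, horizon, change_cost, void_radius):
    """Exhaustive depth-limited search over visible grasps.

    Returns (value, sequence), where value is the sum of predicted success
    probabilities minus change_cost for every tool swap in the sequence.
    """
    if horizon == 0 or not grasps:
        return 0.0, []
    best_value, best_seq = 0.0, []  # stopping early is always allowed
    for g in grasps:
        cost = change_cost if g.tool != current_tool else 0.0
        # Assumed void-zone rule: after picking g, every grasp within
        # void_radius (including g itself) is excluded from further planning,
        # since the scene there may change in ways we cannot predict.
        remaining = [
            h for h in grasps
            if hypot(h.x - g.x, h.y - g.y) > void_radius
        ]
        future, seq = plan(remaining, g.tool, horizon - 1,
                           change_cost, void_radius)
        value = g.p_success - cost + future
        if value > best_value:
            best_value, best_seq = value, [g] + seq
    return best_value, best_seq


def count_tool_changes(sequence, start_tool):
    """Number of tool swaps needed to execute the sequence in order."""
    changes, tool = 0, start_tool
    for g in sequence:
        if g.tool != tool:
            changes += 1
            tool = g.tool
    return changes


visible = [
    Grasp(0.0, 0.0, "suction", 0.9),
    Grasp(5.0, 0.0, "gripper", 0.8),
    Grasp(10.0, 0.0, "suction", 0.7),
]
value, sequence = plan(visible, "suction", horizon=3,
                       change_cost=0.3, void_radius=1.0)
next_grasp = sequence[0]  # receding horizon: execute only the first action
```

In a model-predictive-control loop, only `next_grasp` would be executed; the bin would then be re-imaged and the plan recomputed from the newly visible grasps. The exhaustive search grows factorially with the number of grasps, which is the kind of cost that motivates approximate search in practice.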
