Online Test-time Adaptation for 3D Human Pose Estimation: A Practical Perspective with Estimated 2D Poses


Mar 14, 2025

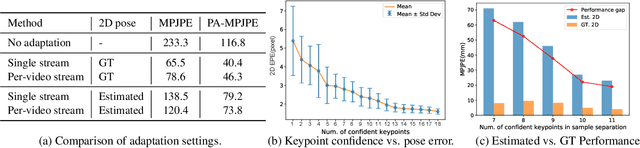

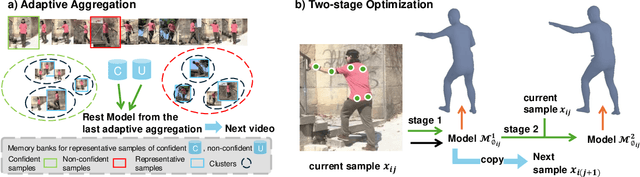

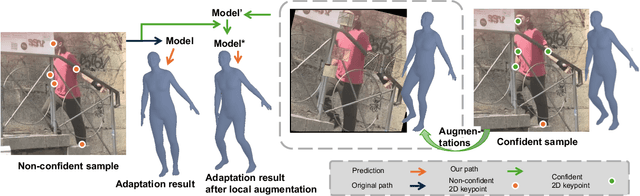

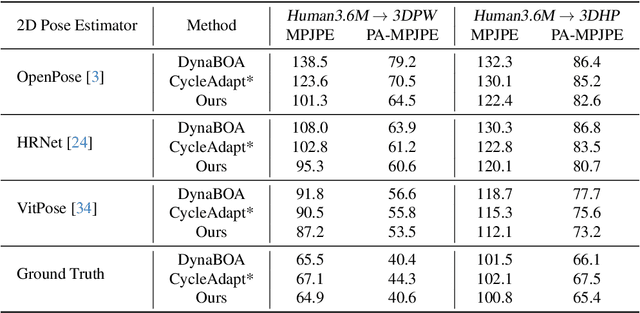

Online test-time adaptation for 3D human pose estimation targets video streams whose distribution differs from the training data. Ground-truth 2D poses are commonly used for adaptation, but in practice only estimated 2D poses are available. This paper addresses adapting models to streaming videos using estimated 2D poses. Comparing adaptations reveals the core challenge: limiting the influence of estimation errors while preserving accurate pose information. To this end, we propose three components for handling varying levels of estimated pose error: adaptive aggregation, a two-stage optimization, and local augmentation. First, we perform adaptive aggregation across videos to initialize the model state with labeled representative samples. Second, within each video, we use a two-stage optimization to benefit from 2D fitting while minimizing the impact of erroneous updates. Third, we employ local augmentation, using adjacent confident samples to update the model before adapting to the current non-confident sample. Our method surpasses the state of the art by a large margin, advancing adaptation towards the more practical setting of using estimated 2D poses.
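The control flow of the local augmentation step can be illustrated with a minimal sketch. This is not the authors' implementation: the abstract does not specify how confidence is measured or which neighbors count as adjacent, so the function name `local_augmentation_schedule`, the `threshold` cutoff, and the `window` of past frames are all hypothetical choices made for illustration. The sketch also assumes that only past frames are available, as would be the case in a streaming setting. It computes only the order in which frames would be used for model updates and leaves the update itself out.

```python
from typing import List, Sequence


def local_augmentation_schedule(
    confidences: Sequence[float],
    threshold: float = 0.5,  # hypothetical confidence cutoff (assumption)
    window: int = 2,         # hypothetical number of past frames to search (assumption)
) -> List[List[int]]:
    """For each frame t, list the frame indices the model is updated on, in order.

    Confident frames are used directly. For a non-confident frame, the
    confident frames among the previous `window` frames come first, and the
    non-confident frame itself comes last.
    """
    schedule: List[List[int]] = []
    for t, conf in enumerate(confidences):
        if conf >= threshold:
            # Confident frame: adapt on it directly.
            schedule.append([t])
            continue
        # Non-confident frame: collect adjacent confident past frames.
        start = max(0, t - window)
        neighbors = [i for i in range(start, t) if confidences[i] >= threshold]
        schedule.append(neighbors + [t])
    return schedule


# Per-frame confidence of the estimated 2D pose (made-up values).
confidences = [0.9, 0.8, 0.3, 0.2, 0.95]
schedule = local_augmentation_schedule(confidences, threshold=0.5, window=2)
print(schedule)  # [[0], [1], [0, 1, 2], [1, 3], [4]]
```

In this example, frames 2 and 3 fall below the cutoff, so each is preceded by whichever of its two previous frames were confident. A real system would replace the index lists with actual gradient updates on the corresponding samples.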
