Online Multimodal Transportation Planning using Deep Reinforcement Learning


May 18, 2021

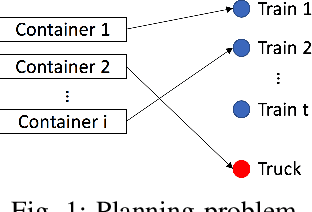

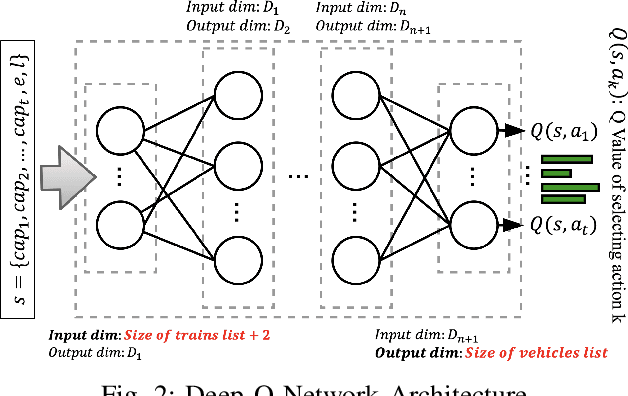

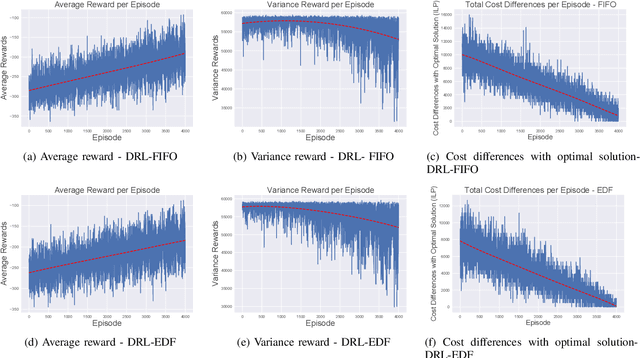

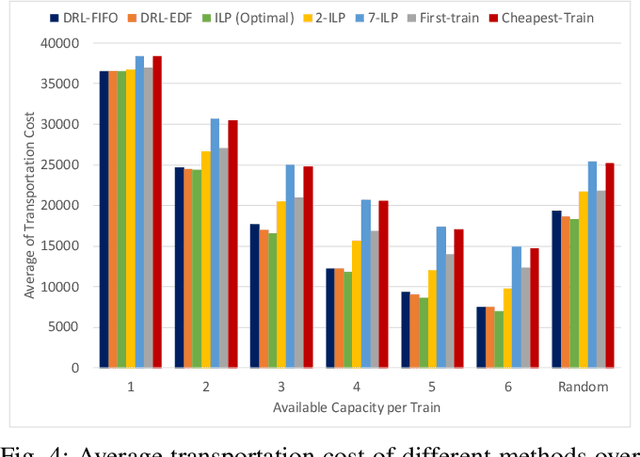

In this paper, we propose a Deep Reinforcement Learning approach to a multimodal transportation planning problem in which each container must be assigned either to a truck or to one of several trains that will transport it to its destination. Traditional planning methods work "offline": they make decisions for a batch of containers before transportation starts. The proposed approach is "online": it makes decisions for individual containers while transportation is being executed. Planning online makes it possible to respond effectively to unforeseen events that affect the original transportation plan, which helps companies lower transportation costs. We implemented different container selection heuristics within the proposed Deep Reinforcement Learning algorithm and evaluated its performance for each heuristic on data that simulate a realistic scenario, designed on the basis of a real case study at a logistics company. The experimental results show that the proposed method learned effective patterns of container assignment. It outperformed the tested competitors by 20.48% to 55.32% in total transportation cost and by 7.51% to 20.54% in utilization of train capacity. Furthermore, its results were within 2.7% (cost) and 0.72% (capacity) of the optimal solution generated by an Integer Linear Programming solver in an offline setting.
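To make the online setting concrete, the following is a minimal sketch of a per-container decision loop. It is not the paper's algorithm: a simple greedy rule stands in where the learned Deep Reinforcement Learning policy would go, and every name and number here (`Train`, `greedy_policy`, `plan_online`, the costs, capacities, and the rule that a train is usable when it departs on or before the container's due day) is an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Train:
    """Hypothetical train: one departure day, a fixed capacity, a per-container cost."""
    departure_day: int
    capacity: int
    cost: float
    load: int = 0


def greedy_policy(due_day, trains, truck_cost):
    """Stand-in for a learned policy (assumption, not the paper's method).

    Returns the index of the cheapest train that still has room, departs on or
    before the container's due day, and is cheaper than the truck.
    Returns None when no such train exists, meaning "send by truck".
    """
    feasible = [
        (t.cost, i)
        for i, t in enumerate(trains)
        if t.load < t.capacity and t.departure_day <= due_day and t.cost < truck_cost
    ]
    return min(feasible)[1] if feasible else None


def plan_online(due_days, trains, truck_cost, policy=greedy_policy):
    """Assign containers one at a time, in arrival order, without looking ahead."""
    assignments = []
    total_cost = 0.0
    for due_day in due_days:
        choice = policy(due_day, trains, truck_cost)
        if choice is None:
            assignments.append("truck")
            total_cost += truck_cost
        else:
            trains[choice].load += 1  # consume one slot on the chosen train
            assignments.append(f"train{choice}")
            total_cost += trains[choice].cost
    return assignments, total_cost


if __name__ == "__main__":
    trains = [Train(departure_day=1, capacity=1, cost=10.0),
              Train(departure_day=3, capacity=2, cost=20.0)]
    print(plan_online([2, 1, 3, 3], trains, truck_cost=50.0))
```

Because `plan_online` takes the policy as a parameter, a trained agent could in principle be substituted for `greedy_policy` without changing the loop; an offline planner, by contrast, would see the whole list of containers before committing to any assignment.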
