Online Multi-agent Reinforcement Learning for Decentralized Inverter-based Volt-VAR Control


Jun 23, 2020

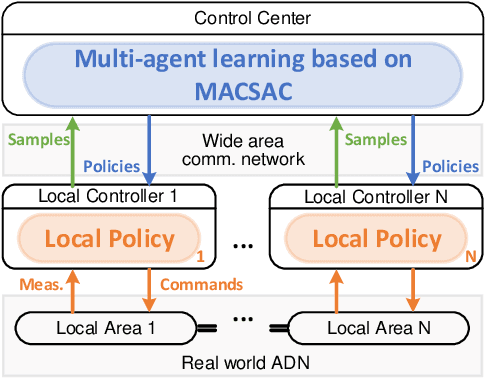

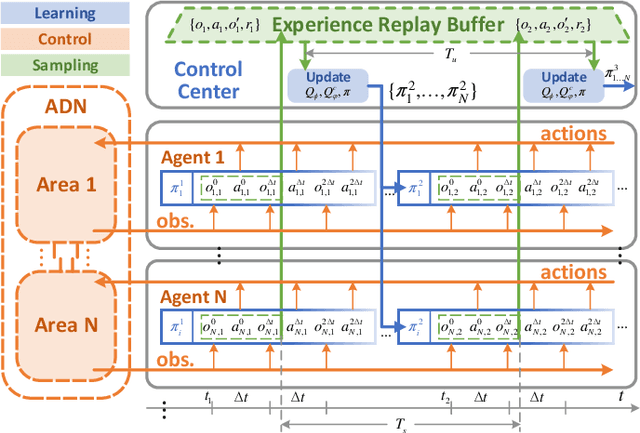

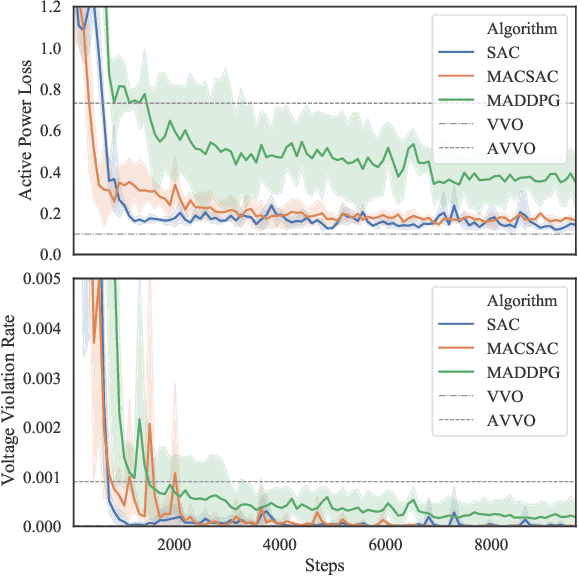

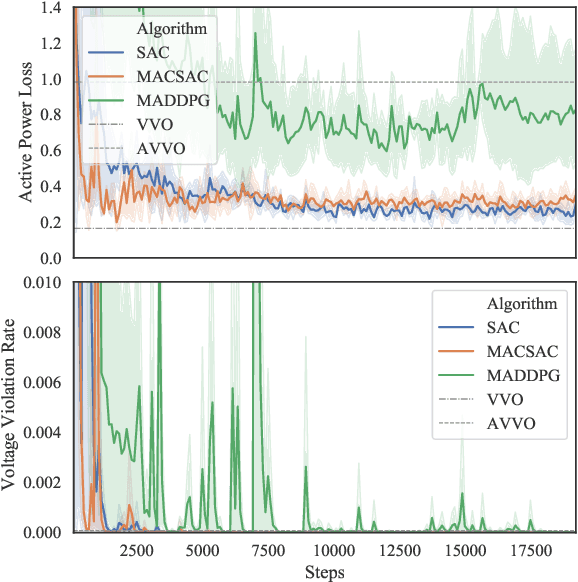

Distributed Volt/VAR control (VVC) methods have been widely studied for active distribution networks (ADNs), but they rely on a perfect network model and real-time peer-to-peer (P2P) communication. In practice, the model is always incomplete, with significant parameter errors, and such a P2P communication system is hard to maintain. In this paper, we propose an online multi-agent reinforcement learning and decentralized control framework (OLDC) for VVC. In this framework, the VVC problem is formulated as a constrained Markov game, and we propose a novel multi-agent constrained soft actor-critic (MACSAC) reinforcement learning algorithm. MACSAC trains the control agents online, so an accurate ADN model is no longer needed. The trained agents can then realize decentralized optimal control using only local measurements, without real-time P2P communication. OLDC with MACSAC shows high flexibility, efficiency, and robustness under various computing and communication conditions. Numerical simulations on IEEE test cases demonstrate that the proposed MACSAC outperforms state-of-the-art learning algorithms and support the superiority of the OLDC framework in online application.
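As a rough illustration of what a constrained Markov game with a soft (entropy-regularized) objective can look like, the generic form below may help. It is a textbook-style sketch and not necessarily the formulation used in the paper. All symbols are assumptions introduced here: $N$ agents with local observations $o_{i,t}$ and policies $\pi_i$, a shared reward $r$ (for example, negative network loss), a constraint cost $c$ (for example, voltage violation), a threshold $\bar{c}$, a discount factor $\gamma$, an entropy temperature $\alpha$, and a Lagrange multiplier $\lambda$.

```latex
% Generic constrained, entropy-regularized multi-agent objective (illustrative only)
\begin{aligned}
\max_{\pi_1,\dots,\pi_N} \quad
  & \mathbb{E}\!\left[\sum_{t=0}^{\infty} \gamma^{t}
    \Big( r(s_t, a_t) + \alpha \sum_{i=1}^{N}
    \mathcal{H}\big(\pi_i(\cdot \mid o_{i,t})\big) \Big)\right] \\
\text{s.t.} \quad
  & \mathbb{E}\!\left[\sum_{t=0}^{\infty} \gamma^{t}\, c(s_t, a_t)\right] \le \bar{c}

\end{aligned}

% Lagrangian relaxation commonly used to handle the constraint
\min_{\lambda \ge 0} \; \max_{\pi_1,\dots,\pi_N} \;
\mathbb{E}\!\left[\sum_{t=0}^{\infty} \gamma^{t}
  \Big( r(s_t, a_t) + \alpha \sum_{i=1}^{N}
  \mathcal{H}\big(\pi_i(\cdot \mid o_{i,t})\big)
  - \lambda\, c(s_t, a_t) \Big)\right] + \lambda\, \bar{c}
```

In this sketch each policy $\pi_i$ conditions only on its own observation $o_{i,t}$, which is what would allow execution from local measurements once training is complete.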
