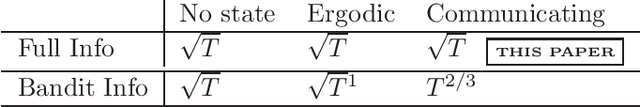

Online Learning in Adversarial MDPs: Is the Communicating Case Harder than Ergodic?

Nov 03, 2021

We study online learning in adversarial communicating Markov Decision Processes (MDPs) with full information. When the transitions are deterministic, we give an algorithm that achieves $O(\sqrt{T})$ regret with respect to the best fixed deterministic policy in hindsight, and we prove a regret lower bound for this setting that is tight up to polynomial factors in the MDP parameters. We also give an inefficient algorithm that achieves $O(\sqrt{T})$ regret in communicating MDPs under an additional mild restriction on the transition dynamics.
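For readers unfamiliar with the benchmark, regret against the best fixed deterministic policy in hindsight is commonly written as below. This is a sketch in assumed notation, not necessarily the paper's: $\ell_t$ is the loss function chosen by the adversary in round $t$, $(s_t, a_t)$ is the learner's state-action pair, $\Pi$ is the set of deterministic stationary policies, and $s_t^{\pi}$ is the state reached in round $t$ when following $\pi$ from the start.

$$
R_T \;=\; \mathbb{E}\!\left[\sum_{t=1}^{T} \ell_t(s_t, a_t)\right] \;-\; \min_{\pi \in \Pi}\, \mathbb{E}\!\left[\sum_{t=1}^{T} \ell_t\big(s_t^{\pi}, \pi(s_t^{\pi})\big)\right]
$$

Under this definition, a bound $R_T = O(\sqrt{T})$ means the average per-round regret $R_T / T$ shrinks at rate $O(1/\sqrt{T})$ as the horizon $T$ grows.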

* 15 pages
