Online Extrinsic Camera Calibration for Temporally Consistent IPM Using Lane Boundary Observations with a Lane Width Prior


Aug 09, 2020

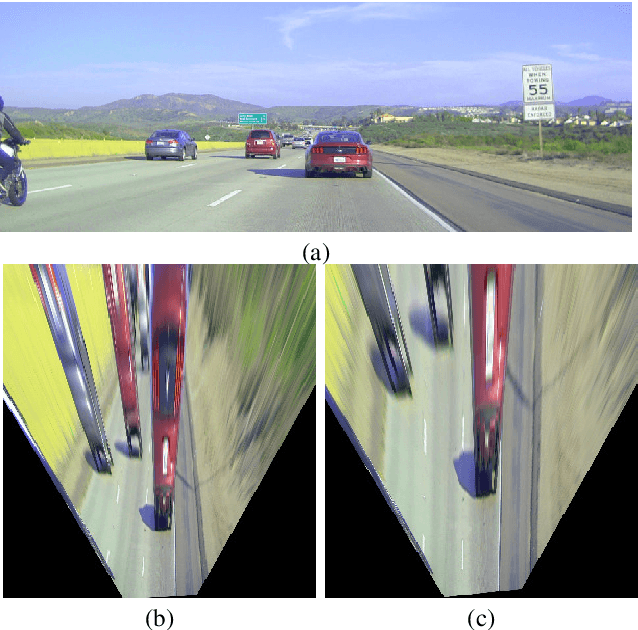

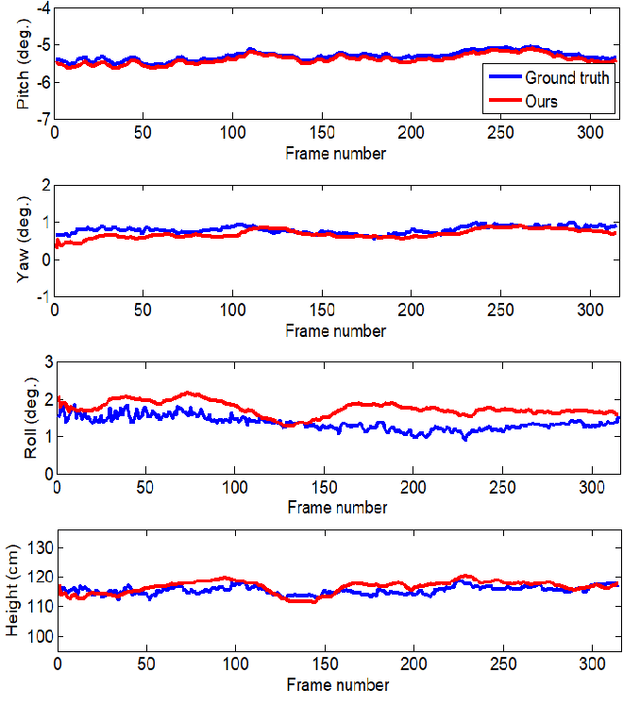

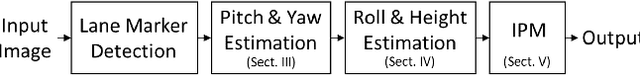

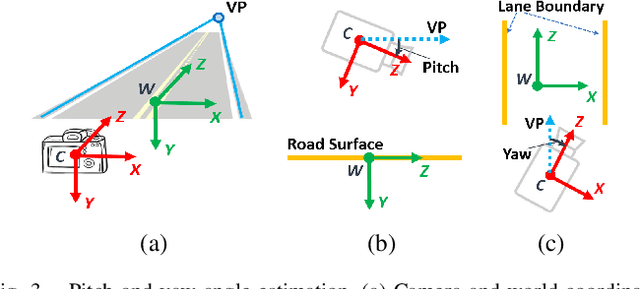

In this paper, we propose a method for online extrinsic camera calibration, i.e., estimating the pitch, yaw, and roll angles and the camera height above the road surface from sequential driving scene images. The proposed method estimates the extrinsic camera parameters in two steps: 1) the pitch and yaw angles are estimated simultaneously from a vanishing point computed from a set of lane boundary observations, and then 2) the roll angle and camera height are computed by minimizing the difference between lane width observations and a lane width prior. The extrinsic camera parameters are updated sequentially using an extended Kalman filter (EKF) and are finally used to generate a temporally consistent bird's-eye-view (BEV) image by inverse perspective mapping (IPM). We demonstrate the superiority of the proposed method on synthetic and real-world datasets.
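The first step can be illustrated with a minimal sketch in Python. It is not the paper's implementation: it assumes a pinhole camera with a known intrinsic matrix `K`, straight lane boundaries that are parallel on the road, and a camera frame with x to the right, y down, and z forward. The function names (`line_through`, `vanishing_point`, `pitch_yaw_from_vp`) and the sign conventions are hypothetical choices made for this example, and the EKF update and the roll/height step are left out.

```python
import numpy as np

def line_through(p, q):
    """Homogeneous image line (a, b, c) through two pixel points p and q."""
    l = np.cross([p[0], p[1], 1.0], [q[0], q[1], 1.0])
    # Scale so that a*u + b*v + c is a signed pixel distance to the line.
    return l / np.hypot(l[0], l[1])

def vanishing_point(lines):
    """Least-squares intersection (u, v) of N homogeneous lines (N x 3)."""
    A = np.asarray(lines, dtype=float)
    # Each line gives one equation a*u + b*v = -c.
    uv, *_ = np.linalg.lstsq(A[:, :2], -A[:, 2], rcond=None)
    return uv

def pitch_yaw_from_vp(vp, K):
    """Pitch and yaw (radians) of the lane direction seen from the camera.

    Assumed convention: pitch > 0 when the vanishing point lies above the
    principal point, yaw > 0 when it lies to the right of it.
    """
    # Back-project the vanishing point to a unit direction in the camera frame.
    d = np.linalg.solve(K, np.array([vp[0], vp[1], 1.0]))
    d /= np.linalg.norm(d)
    pitch = np.arcsin(-d[1])
    yaw = np.arctan2(d[0], d[2])
    return pitch, yaw

# Hypothetical intrinsics and two lane boundaries given as pixel point pairs.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 360.0],
              [0.0, 0.0, 1.0]])
left = line_through((200.0, 720.0), (540.0, 400.0))
right = line_through((1080.0, 720.0), (700.0, 400.0))
pitch, yaw = pitch_yaw_from_vp(vanishing_point([left, right]), K)
print(np.degrees(pitch), np.degrees(yaw))
```

With more than two lane boundaries, `vanishing_point` returns the point that minimizes the summed squared pixel distance to all lines, which is one simple way to fuse several observations before any temporal filtering.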
