Online and Distribution-Free Robustness: Regression and Contextual Bandits with Huber Contamination


Oct 08, 2020

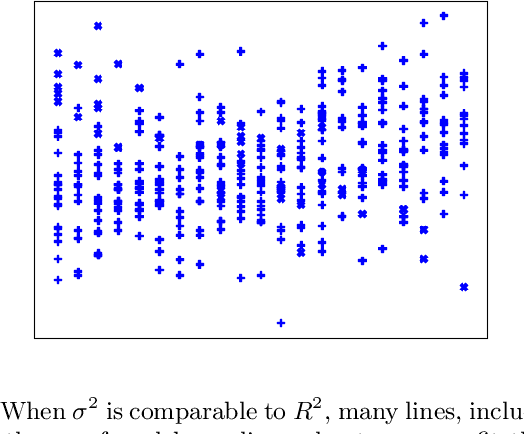

In this work we revisit two classic high-dimensional online learning problems, namely regression and linear contextual bandits, from the perspective of adversarial robustness. Existing works in algorithmic robust statistics make strong distributional assumptions that ensure that the input data is evenly spread out or comes from a nice generative model. Is it possible to achieve strong robustness guarantees without any distributional assumptions, where the sequence of tasks we are asked to solve is adaptively and adversarially chosen? We answer this question in the affirmative for both regression and linear contextual bandits. In fact, our algorithms succeed where convex surrogates fail, in the sense that we show strong lower bounds categorically for the existing approaches. Our approach is based on a novel way to use the sum-of-squares hierarchy in online learning and in the absence of distributional assumptions. Moreover, we give extensions of our main results to infinite-dimensional settings where the feature vectors are represented implicitly via a kernel map.
