Online Alternating Direction Method

Paper and Code

Jun 27, 2012

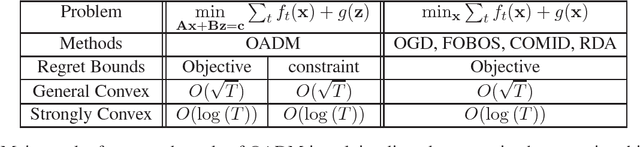

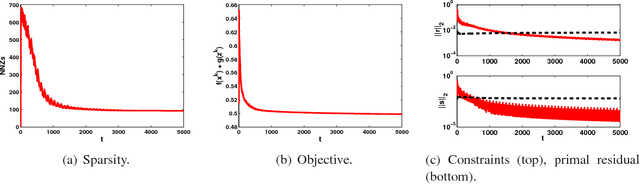

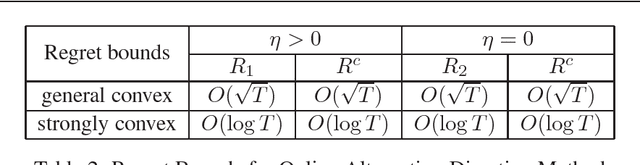

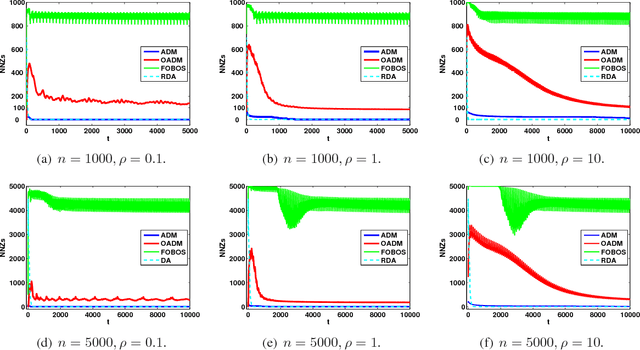

Online optimization has emerged as a powerful tool in large-scale optimization. In this paper, we introduce efficient online algorithms based on the alternating directions method (ADM). We introduce a new proof technique for ADM in the batch setting, which yields the O(1/T) convergence rate of ADM and forms the basis of the regret analysis in the online setting. We consider two scenarios in the online setting, based on whether or not the solution needs to lie in the feasible set. In both settings, we establish regret bounds for both the objective function and the constraint violation, for general and strongly convex functions. Preliminary results are presented to illustrate the performance of the proposed algorithms.
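For readers unfamiliar with ADM, the following is a minimal sketch of the standard batch iteration in scaled form, not the online algorithms proposed in the paper. It assumes a toy scalar problem chosen only for illustration: minimize 0.5 * (x - a)^2 + lam * |z| subject to x - z = 0. The function names, the penalty parameter `rho`, and the iteration count are all hypothetical choices.

```python
def soft_threshold(v, kappa):
    """Proximal operator of kappa * |.| applied to the scalar v."""
    if v > kappa:
        return v - kappa
    if v < -kappa:
        return v + kappa
    return 0.0


def adm_scalar_lasso(a, lam, rho=1.0, num_iters=100):
    """Illustrative batch ADM (scaled form) for the toy problem
        minimize 0.5 * (x - a)**2 + lam * |z|   subject to   x - z = 0.
    Returns (x, z, constraint_violation) after num_iters iterations.
    """
    x = z = u = 0.0  # u is the scaled dual variable
    for _ in range(num_iters):
        # x-update: closed-form minimizer of the quadratic augmented Lagrangian in x
        x = (a + rho * (z - u)) / (1.0 + rho)
        # z-update: proximal step on the l1 term
        z = soft_threshold(x + u, lam / rho)
        # dual update: accumulate the residual of the constraint x - z = 0
        u = u + x - z
    return x, z, abs(x - z)


x, z, violation = adm_scalar_lasso(a=3.0, lam=1.0)
print(x, z, violation)
```

The minimizer of this toy problem is the soft-thresholding of `a` by `lam`, so the iterates can be checked against a known answer. The returned `violation` is the residual |x - z|, the quantity that the constraint-violation bounds mentioned in the abstract are concerned with.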

* Appears in Proceedings of the 29th International Conference on Machine Learning (ICML 2012)
